Earlier this month, Apple revealed three new features baked into most of its major operating systems, each designed to help protect children against sexual abuse and exploitation. And while these features are broadly hailed as a good thing, it’s the finer details of one of them in particular that have many people concerned.

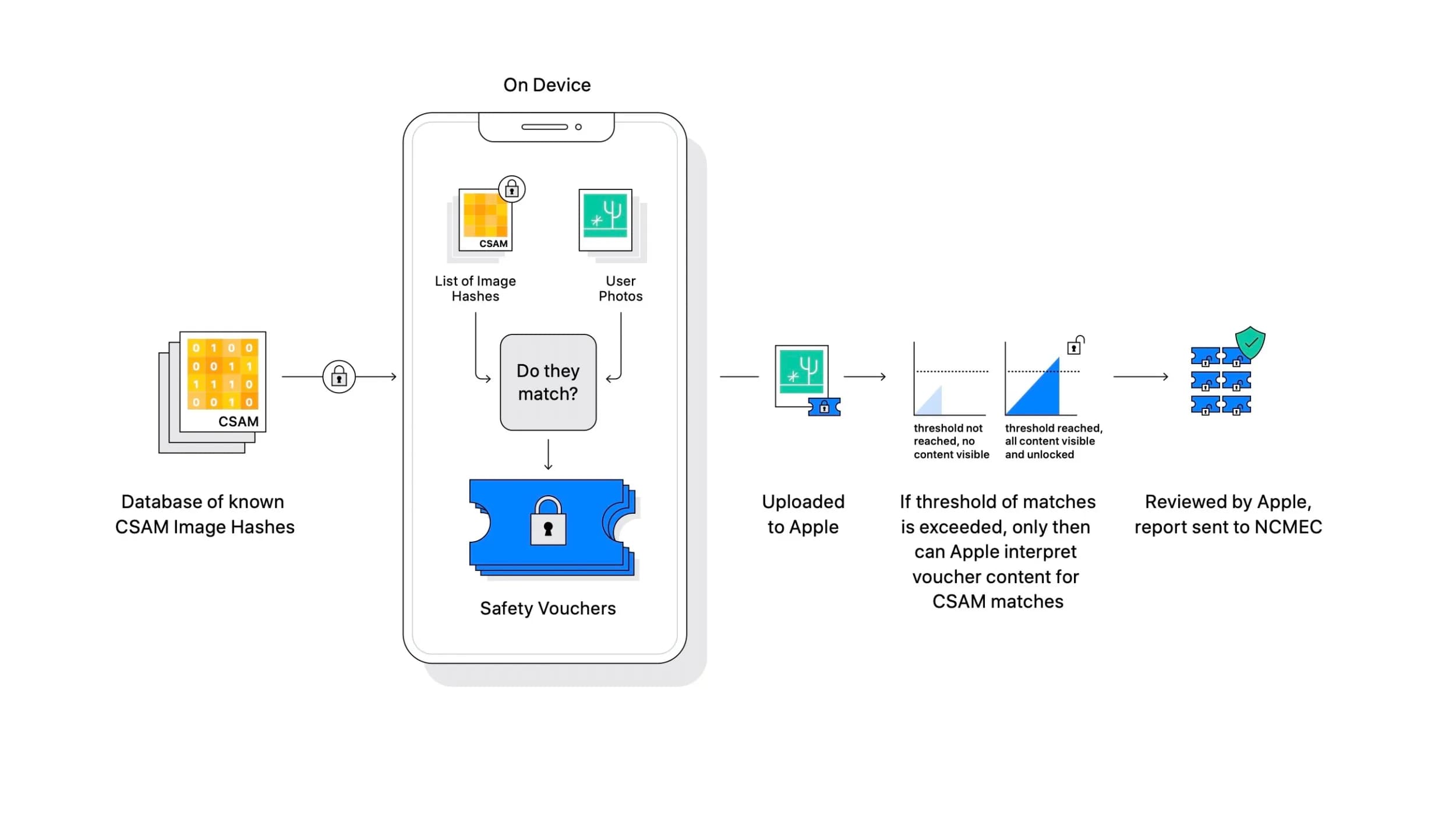

That general distrust isn’t so much directed at Apple itself. Most of it centers on the idea that Apple could be laying the groundwork for being forced to use these new features (namely, scanning photos for specific known hashes) in less-than-positive ways. Many believe that the ability to scan iCloud Photo libraries for known hashes of child sexual abuse material, or CSAM, puts us firmly on the precipice of a very slippery slope.

So while Apple’s overall goal is good, it’s the tools built to reach that goal that have some people spooked, or otherwise unhappy with Apple and what they see as overreach.

It shouldn’t be a surprise, then, that ever since Apple unveiled these features, the company has been trying to convince people that they’re safe: that Apple won’t abuse them, that it won’t let other entities abuse them, and that everything will be fine. That effort started with an internal memo from a software VP at Apple, and it has since grown into a dedicated FAQ and even an interview with Apple’s Head of Privacy.

And today, Apple’s back at it with another document, titled “Security Threat Model Review of Apple’s Child Safety Features” (via MacRumors). In it, Apple lays out not only the privacy and security protections already built into iOS, macOS, and watchOS (where these features will be present at launch), but also how those protections form the foundation for the new features in general.

For the most part, the document echoes what Apple’s executives have already said. It does, however, finally confirm that CSAM scanning for known hashes will have a threshold of 30 images, a number Apple had not disclosed until now. Only if an iCloud Photo library reaches that threshold will Apple flag the account, and even then the account will be handed off for manual review before any further steps are taken.
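To make the threshold idea concrete, here is a deliberately simplified Python sketch. It assumes each photo can be reduced to a hash string and checked against a set of known hashes; the function names and placeholder hashes are hypothetical, and it does not model the cryptography Apple describes (perceptual hashing and blinded matching against an encrypted database). Only the 30-image threshold comes from Apple’s document.

```python
# Hypothetical, simplified illustration of a match threshold.
# It does NOT model Apple's actual system (perceptual hashing, blinded
# matching, encrypted databases); the hash strings are placeholders.

MATCH_THRESHOLD = 30  # the threshold figure Apple confirmed


def count_matches(photo_hashes, known_hashes):
    """Count photos whose hash appears in the set of known hashes."""
    return sum(1 for h in photo_hashes if h in known_hashes)


def needs_manual_review(photo_hashes, known_hashes, threshold=MATCH_THRESHOLD):
    """Flag an account for human review only once matches reach the threshold."""
    return count_matches(photo_hashes, known_hashes) >= threshold


if __name__ == "__main__":
    known = {f"known-{i}" for i in range(100)}
    library = [f"known-{i}" for i in range(29)] + ["vacation-1", "vacation-2"]
    print(needs_manual_review(library, known))  # 29 matches: below threshold
    library.append("known-50")
    print(needs_manual_review(library, known))  # 30 matches: threshold reached
```

The point of a threshold in this sketch is that a handful of matches, which could include false positives, never triggers review on its own; only an accumulation of matches does.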

Among other things, the document spells out the security and privacy requirements for these features:

We formalize the above design principles into the following security and privacy requirements.

• Source image correctness: there must be extremely high confidence that only CSAM images – and no other images – were used to generate the encrypted perceptual CSAM hash database that is part of the Apple operating systems which support this feature.

• Database update transparency: it must not be possible to surreptitiously change the encrypted CSAM database that’s used by the process.

• Matching software correctness: it must be possible to verify that the matching software compares photos only against the encrypted CSAM hash database, and against nothing else.

• Matching software transparency: it must not be possible to surreptitiously change the software performing the blinded matching against the encrypted CSAM database.

• Database and software universality: it must not be possible to target specific accounts with a different encrypted CSAM database, or with different software performing the blinded matching.

• Data access restriction: the matching process must only reveal CSAM, and must learn no information about any non-CSAM image.

• False positive rejection: there must be extremely high confidence that the process will not falsely flag accounts.

The full document is somewhat technical, but still strives to make things as clear as possible. It’s worth a read if these new features have you on the fence.

It’s also worth noting that, while these features will initially be available only in the United States and only in first-party apps, that won’t always be the case. Apple has confirmed that a global expansion is in the cards, but the company will conduct a country-by-country review before the features go live in any other market. What’s more, Apple says it isn’t ruling out bringing these features to third-party apps, though there’s no timeframe for that, either.