Apple can make waves with all sorts of announcements. Take, for example, its recent unveiling of Expanded Protections for Children, a suite of three features aimed at helping protect children from abuse and exploitation. The features are also meant to give more information and resources to those who might be at risk.

Ever since the announcement, Apple has been trying to quash fears and doubts about these features, especially the scanning of iCloud Photos libraries, where one of the new features searches for known child sexual abuse material (CSAM). Many critics have said that this feature in particular could be exploited by some groups and governments for less-than-altruistic purposes.

But Apple doesn’t appear to be giving up on the idea or its implementation. It has even published a dedicated FAQ to clear the air, and the company recently hosted a Q&A session with reporters (via MacRumors) about these new features.

It was during this session that the company revealed that adding third-party app support for these child protection features is a goal. Apple didn’t provide any examples of what that might look like, nor did it offer a timetable for when third-party support might arrive.

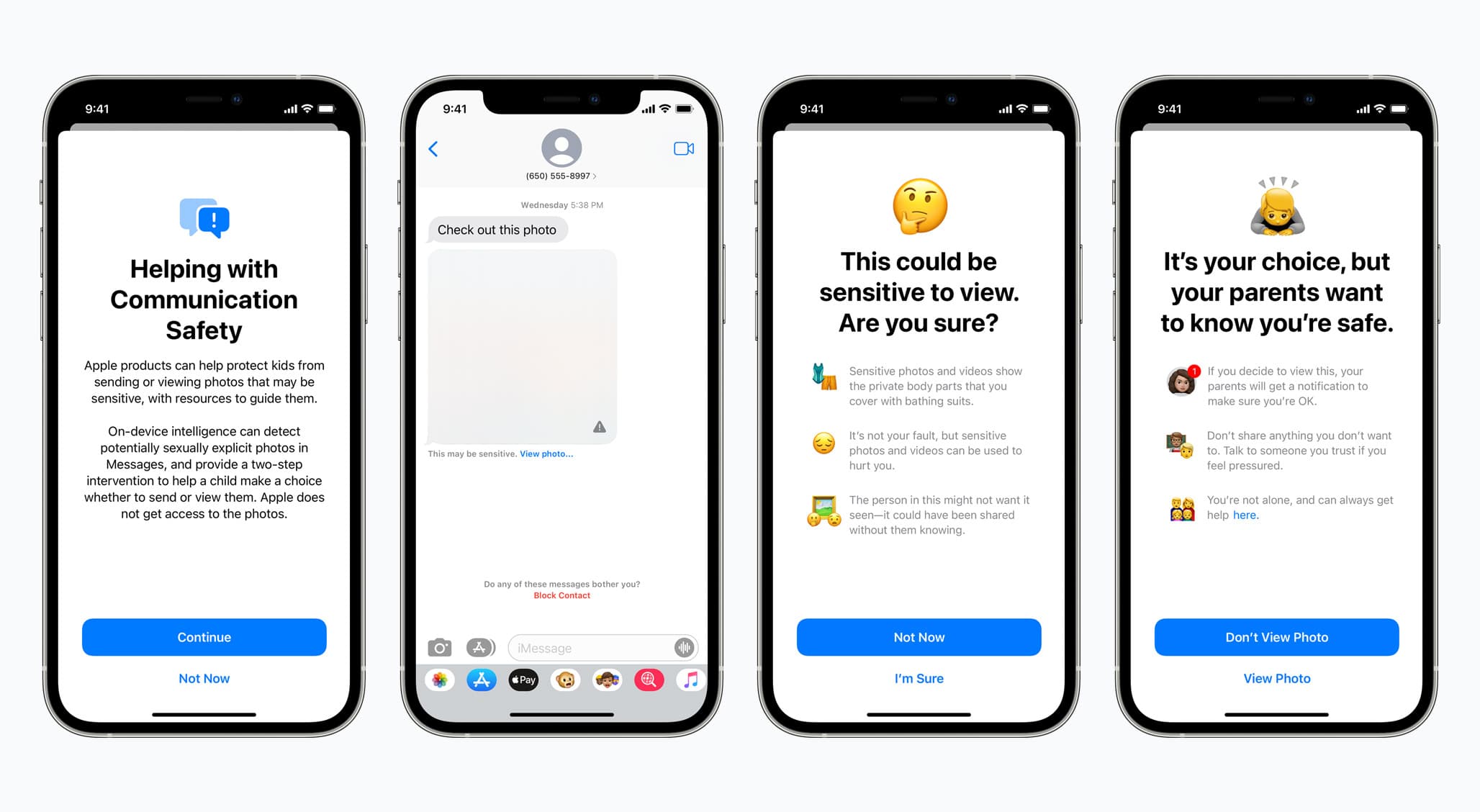

However, one potential candidate is the Communication Safety feature. At launch, this feature will allow Messages to detect when an iOS, iPadOS, or macOS user sends or receives potentially sexually explicit images through the app. Apple could extend that support to third-party apps like Instagram or other messaging apps, too.

Cloud-based photo storage apps could likewise take advantage of the CSAM-detection tool.

Per the original report, Apple says any expansion to third-party apps would not undermine the security protections or user privacy these features offer in its first-party apps and services:

> Apple did not provide a timeframe as to when the child safety features could expand to third parties, noting that it still has to complete testing and deployment of the features, and the company also said it would need to ensure that any potential expansion would not undermine the privacy properties or effectiveness of the features.

Apple is putting out a lot of fires here, which makes sense. Not only is the company tackling child exploitation and sexual abuse directly in an effort to stamp them out, but it’s doing so in a way that many people believe amounts to Big Brother-style surveillance.

It does sound like Apple is trying to ensure these features are used only for good, but only time will tell whether that remains the case well into the future.