Earlier today, Apple released a dedicated frequently asked questions (FAQ) document on Expanded Protections for Children, the company’s new initiative to help protect children against exploitation and abuse. While the intentions are good, the initiative has ruffled a lot of feathers, especially over one of its features.

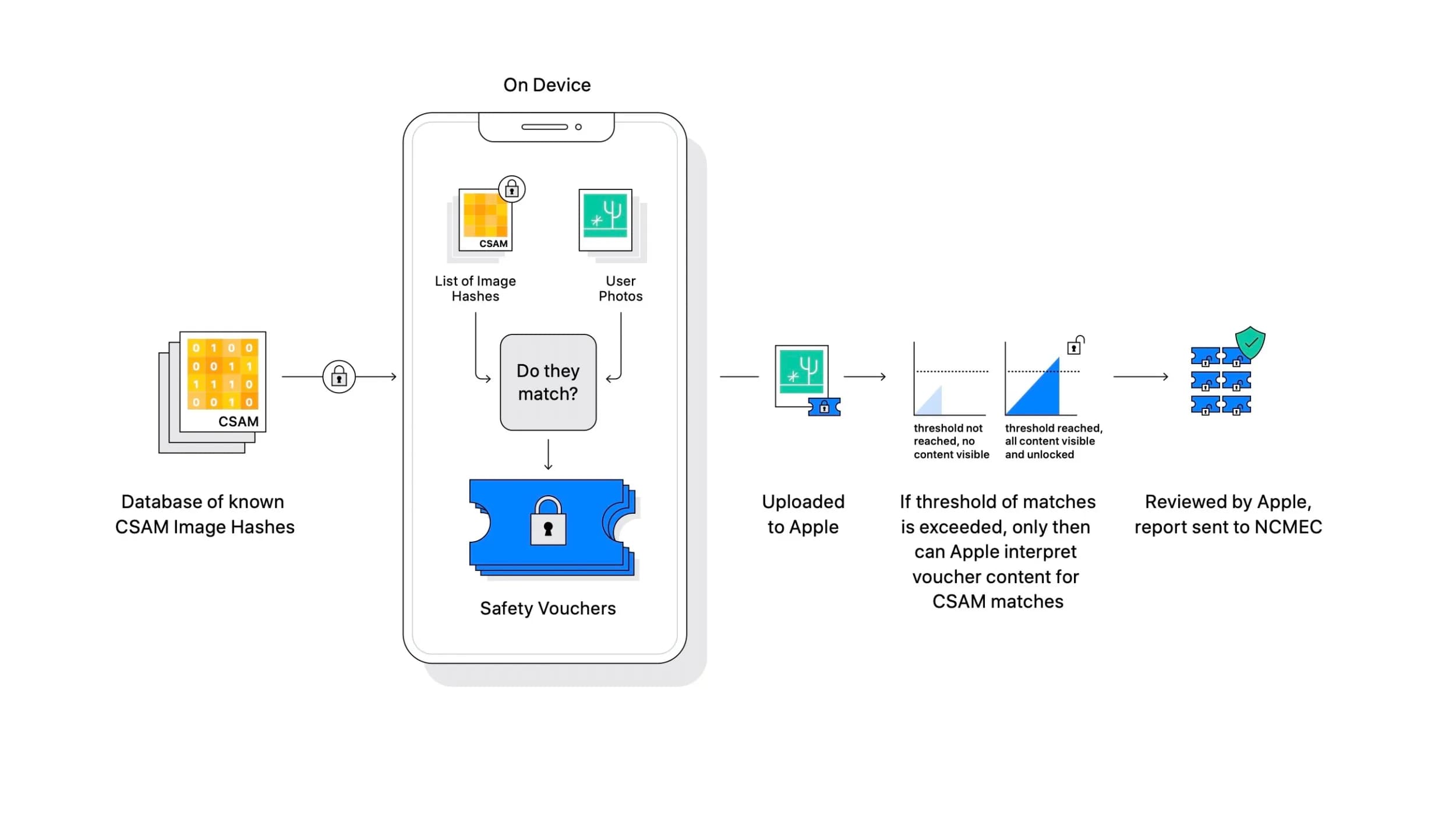

The new protections for children are a three-part effort: photo scanning, new guidance in Search and Siri, and detection of potentially explicit photos sent or received via iMessage. The photo scanning, which only applies to photos uploaded to iCloud Photos, is what has raised most of the concerns.

Since unveiling the new features, Apple has defended the initiative and tried to put out some of the fire surrounding it. One software VP sent an internal memo to that end, reaffirming the company’s commitment to privacy and user security while arguing that its approach to protecting children is well-intentioned.

However, it isn’t so much Apple’s implementation of these features that has people up in arms (most people, anyway). It’s the implications of these features and the potential “slippery slope” that could follow. Many believe these systems could be abused by individuals and groups, especially by governments looking to crack down on their citizens.

Apple has said that the photo detection feature, which looks for known child sexual abuse material (CSAM), will only be available in the United States when it launches with iOS 15 later this year. The company says global expansion is possible, but it will conduct a country-by-country review before rolling the feature out to more regions.

Now the company is back at it, releasing a dedicated FAQ on communication safety in iMessage and CSAM detection in iCloud Photos. Apple explains how these features differ, and much more. The FAQ goes deep on CSAM detection while keeping the explanations simple enough to parse quickly.

For example, Apple answers the question of whether this feature will scan every photo on your iPhone:

No. By design, this feature only applies to photos that the user chooses to upload to iCloud Photos, and even then Apple only learns about accounts that are storing collections of known CSAM images, and only the images that match to known CSAM. The system does not work for users who have iCloud Photos disabled. This feature does not work on your private iPhone photo library on the device.

Apple also notes in the FAQ that its CSAM detection is designed not to discover any content other than CSAM. The system only works with CSAM hashes provided by the National Center for Missing and Exploited Children (NCMEC), so it isn’t designed to detect any other material.

What about governments forcing Apple to add non-CSAM hashes to the detection system? Apple says that’s not going to happen:

Apple will refuse any such demands. Apple’s CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it. Furthermore, Apple conducts human review before making a report to NCMEC. In a case where the system flags photos that do not match known CSAM images, the account would not be disabled and no report would be filed to NCMEC.

Apple also says its process is designed to prevent non-CSAM hashes from being “injected” into the system for detection:

Our process is designed to prevent that from happening. The set of image hashes used for matching are from known, existing images of CSAM that have been acquired and validated by child safety organizations. Apple does not add to the set of known CSAM image hashes. The same set of hashes is stored in the operating system of every iPhone and iPad user, so targeted attacks against only specific individuals are not possible under our design. Finally, there is no automated reporting to law enforcement, and Apple conducts human review before making a report to NCMEC. In the unlikely event of the system flagging images that do not match known CSAM images, the account would not be disabled and no report would be filed to NCMEC.

Whether this actually makes people more comfortable with these features remains to be seen. How do you feel about it all?