User privacy and security are major focal points for Apple. Safety, too. Keeping user data safe, for instance, is one of the selling points of iOS in general. So it makes sense that Apple would want to broaden that scope, especially as it relates to protecting children.

Today, Apple is revealing its latest efforts in that endeavor. The company is previewing new features coming to its major operating systems later this year. These features will arrive in software updates on a date Apple has not yet disclosed. What’s more, Apple confirmed that the new features will only be available in the United States at launch, though it is looking to expand beyond those borders in the future.

Apple is focusing on three areas: communication safety in Messages, CSAM detection, and expanded guidance in Siri and Search. You can check them all out in greater detail in Apple’s announcement. Below, you’ll find them in brief.

Communication

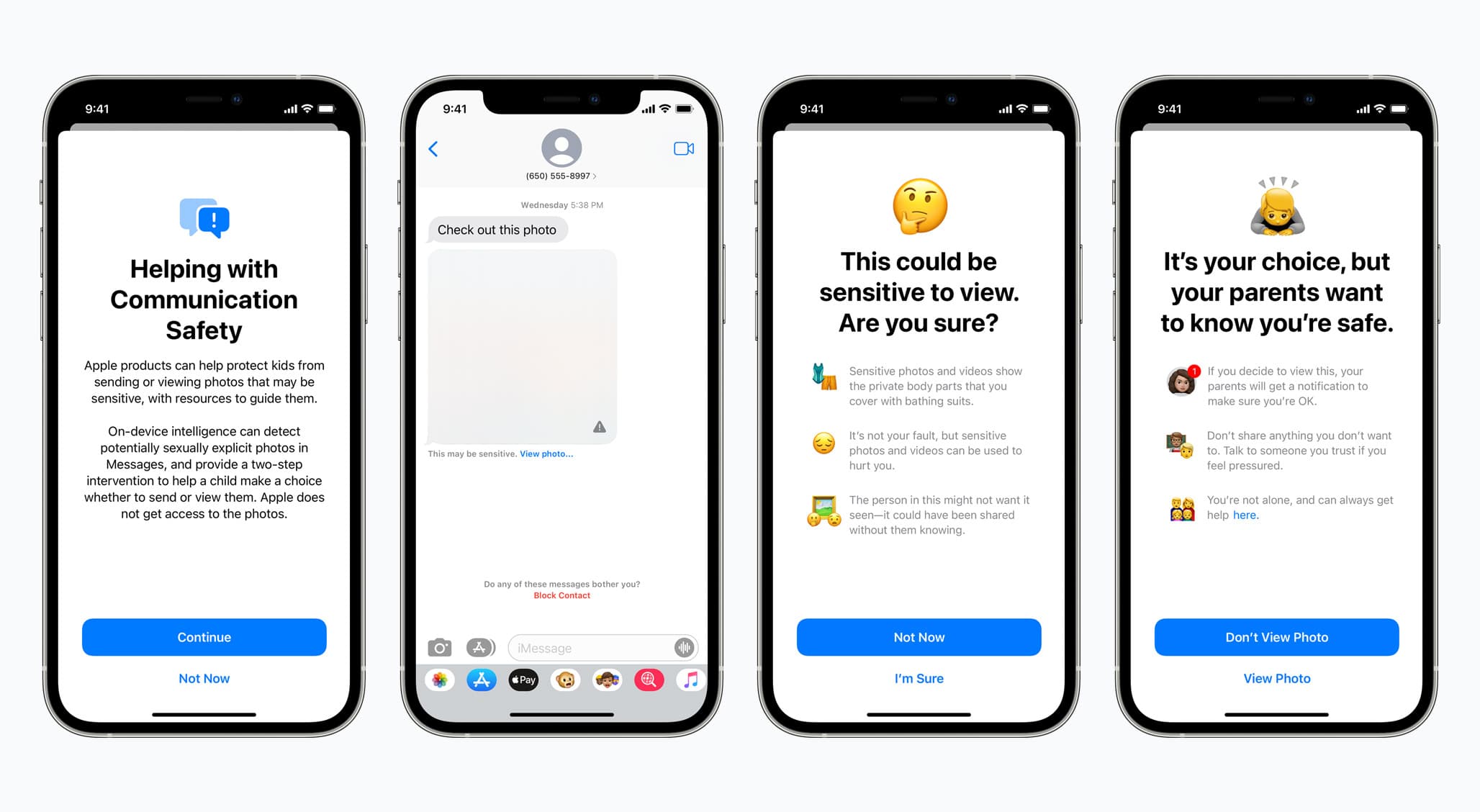

First up is one of the most potentially dangerous areas for children: communication. With this new focus, the Messages app will warn children, and can notify their parents, when a child is about to send a sexually explicit photo. The same goes for receiving these types of photos.

Per the announcement today:

When receiving this type of content, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.

This particular feature will be available for accounts that are set up as families, and it will be available in iOS 15, iPadOS 15, and macOS 12 Monterey.

Apple says on-device machine learning (ML) will handle the detection of explicit photos. The feature is designed so that Apple itself does not gain access to the images.

CSAM detection

Child Sexual Abuse Material (CSAM) is another area that Apple will be focusing on. This material depicts children in sexually explicit activities, and Apple aims to crack down on it. To help, Apple is going to implement technology that can identify known CSAM among the images users store in iCloud Photos. Apple will then be able to report what it finds to the National Center for Missing and Exploited Children (NCMEC), which acts as a comprehensive reporting center for CSAM.

From the announcement:

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.
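To make the flow Apple describes a little more concrete, here is a minimal Python sketch of the general idea: hash an image on the device, compare it against a set of known hashes, and attach the result to a voucher that travels with the upload. This is an illustration under heavy assumptions, not Apple’s implementation. SHA-256 stands in for Apple’s image hashing, a plain set lookup stands in for private set intersection (so, unlike the real system, this sketch reveals the match result on the device), and `SafetyVoucher`, `image_hash`, and `make_voucher` are hypothetical names invented for this example.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet


def image_hash(image_bytes: bytes) -> str:
    # Assumption: SHA-256 is only a stand-in. It matches exact bytes,
    # whereas the announcement describes hashes of known images.
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class SafetyVoucher:
    # Hypothetical structure. In Apple's description the voucher is
    # cryptographic and the match result is not readable like this.
    image_digest: str
    matched: bool


def make_voucher(image_bytes: bytes, known_hashes: FrozenSet[str]) -> SafetyVoucher:
    # Plain set membership, NOT private set intersection: here the
    # device learns the result, which the real design avoids.
    digest = image_hash(image_bytes)
    return SafetyVoucher(image_digest=digest, matched=digest in known_hashes)


# Toy database containing the hash of a single placeholder "known" image.
known = frozenset({image_hash(b"known-example-image")})

flagged = make_voucher(b"known-example-image", known)
clean = make_voucher(b"holiday-photo", known)
print(flagged.matched, clean.matched)
```

The key difference from the sketch is that, per the announcement, neither the device nor Apple can read an individual voucher’s match result at this stage.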

To make sure that Apple itself cannot interpret the safety vouchers unless it is necessary (basically, unless an account accumulates enough matches), the system also uses what’s called “threshold secret sharing”:

Using another technology called threshold secret sharing, the system ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content. The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account.

Only when the threshold is exceeded does the cryptographic technology allow Apple to interpret the contents of the safety vouchers associated with the matching CSAM images. Apple then manually reviews each report to confirm there is a match, disables the user’s account, and sends a report to NCMEC. If a user feels their account has been mistakenly flagged they can file an appeal to have their account reinstated.
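For readers curious how a threshold scheme can work in principle, below is a minimal Python sketch of Shamir’s secret sharing, a classic textbook threshold scheme. Apple’s announcement does not say which construction it uses, so treat this purely as an illustration of the concept: a secret is split into shares, and it can only be rebuilt once at least a threshold number of shares are available. The function names, the prime modulus, and the threshold of 3 are all assumptions made for the example.

```python
import secrets

# Assumption: a Mersenne prime used as the field modulus for this toy example.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int):
    """Split `secret` so that any `threshold` shares can rebuild it."""
    if not 1 <= threshold <= num_shares:
        raise ValueError("threshold must be between 1 and num_shares")
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Lagrange-interpolate the polynomial at x = 0 to get the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Illustrative values only: 5 shares, any 3 of which rebuild the secret.
shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]) == 123456789)
```

With only two of the shares, interpolation yields an unrelated value with overwhelming probability, which mirrors the idea that vouchers stay unreadable until an account crosses the threshold.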

It’s worth noting that if a user turns off iCloud Photos, CSAM detection will not run, since the matching only happens for images on their way to iCloud Photos.

Search and Siri

The third element is expanded guidance in Siri and Search. This is meant to help parents and children stay safe online, as well as during “unsafe situations.” With this new feature implemented, users will be able to ask Siri directly where they can report CSAM or child exploitation, and then be directed to resources.

If someone tries to search for CSAM-related topics with Siri, or with the search function in general, the software itself will intervene and inform the user “that interest in this topic is harmful and problematic.” The feature will then suggest resources to help with the issue.

Apple says these updates for search and Siri are coming in iOS 15, iPadOS 15, macOS 12 Monterey, and watchOS 8.

Go check out Apple’s full announcement for more.