Every once in a while, Apple manages to do something that is Very Controversial. It doesn’t happen all the time, and usually it’s hardware-related. Like the butterfly keyboard. Or the Touch Bar. Or how you charge a Magic Mouse 2. Things like that.

But then sometimes the company just swings for the fences and goes for it, you know? Let’s not forget when Siri recordings were being heard by people who probably shouldn’t have been hearing them. (Not that this was just an Apple problem, mind.) And then there was the whole iPhone throttling issue. Further back, there was the celebrity photo dump that put a lot of private photos out there in the wild. (Not that this was Apple’s doing, but it certainly falls within the same parameters.)

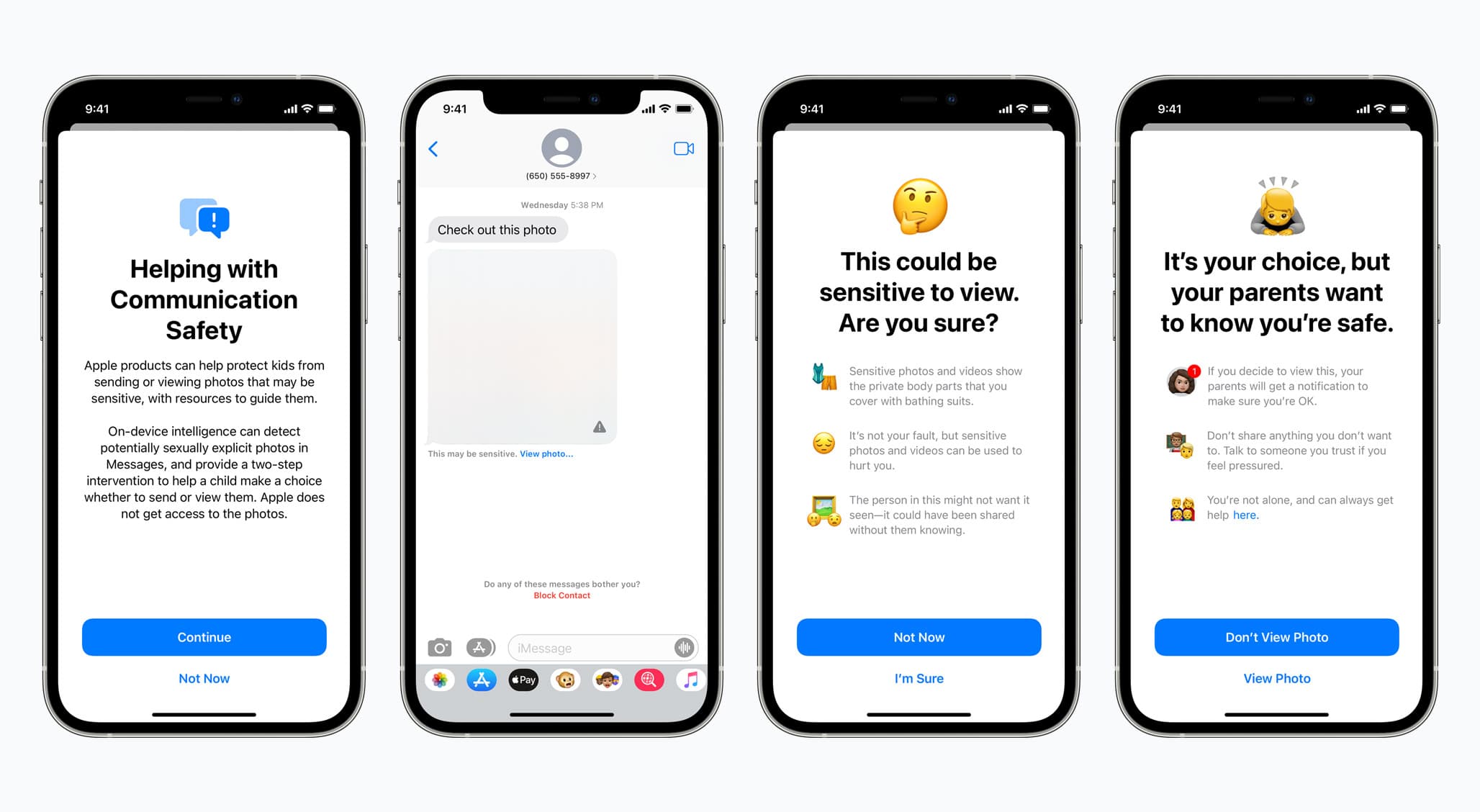

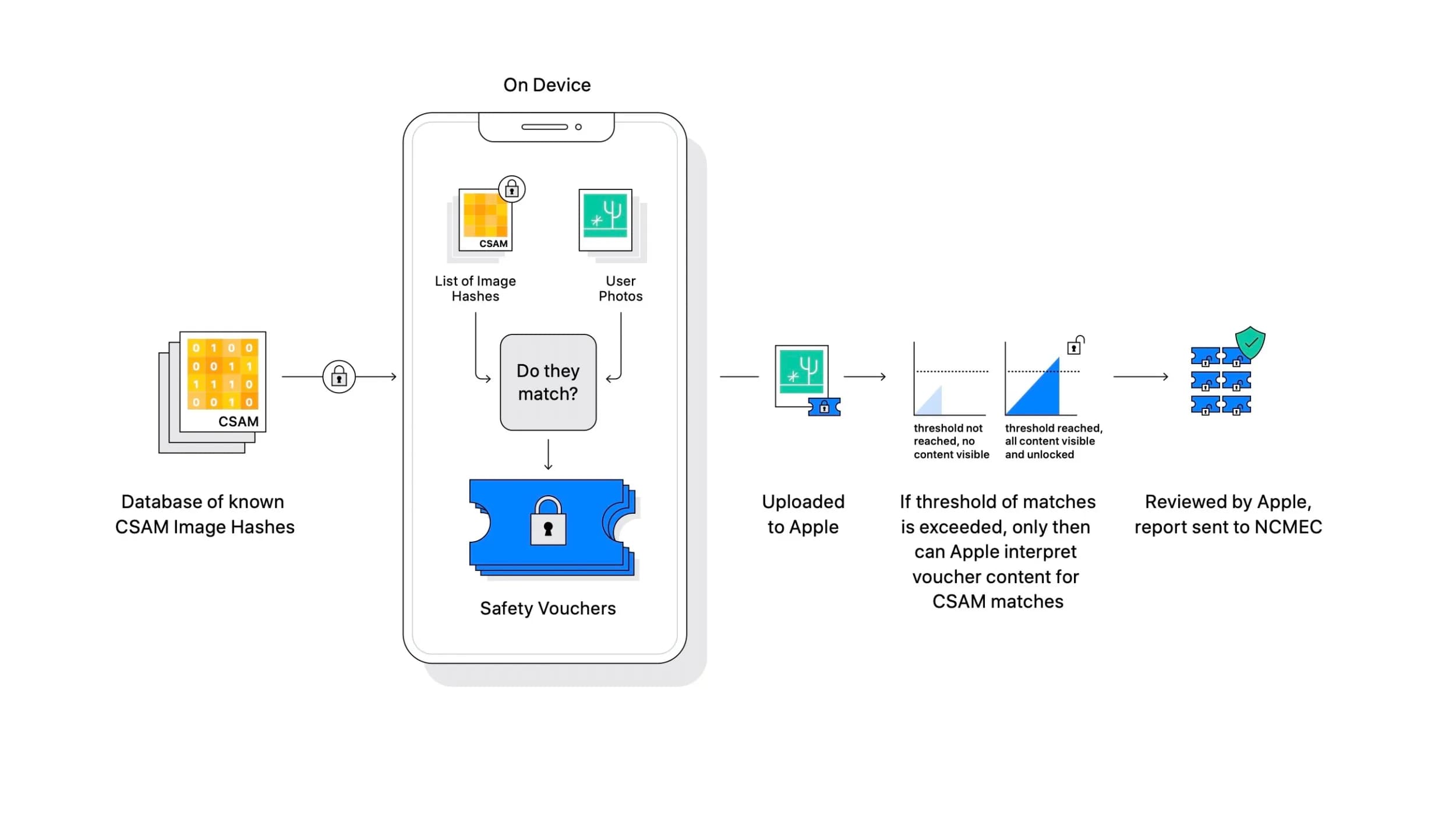

The company was back at it again yesterday when it unveiled what it calls Expanded Protections for Children. These new features are coming soon to iOS 15, iPadOS 15, macOS 12 Monterey, and watchOS 8. With them, Apple is hoping it can help reduce child exploitation and abuse. There are three major components to the effort: scanning iCloud Photo libraries for what’s called child sexual abuse material, or CSAM; better knowledge related to child exploitation and abuse found within search and Siri; and warnings for sending and/or receiving potentially explicit photos while using iMessage.

Better knowledge and information shared within search and Siri is good news. Warnings for parents that their young kids might be receiving, or sending, potentially explicit content in iMessage are a good thing. But the line gets a bit wavy when you start telling people a major company, even Apple, is going to automatically scan your photo library.

Even if it’s just looking for specific markers within photos that relate directly to child exploitation, it’s a step too far for a lot of people.

Not just that, though. It’s about that “slippery slope” we hear so much about, especially as it relates to technology. What starts off as a great idea can eventually transform into something much worse. Something much uglier. Indeed, Apple’s software implementation is looking for specific image hashes that have been tied directly to CSAM. Many have pointed out that those hashes could be broadened to include things like political propaganda, or just about anything depending on where the hash libraries are coming from.

An authoritarian government, for instance, could crack down on a lot with this feature baked into widely available phones.

For its part, and with the feature in its current state, Apple says that false positives have been whittled down to an astonishing one in a trillion. It didn’t reveal what the threshold is, but the company won’t be taking action on the basis of a single photo that matches the CSAM hashes. The company appears to be making the necessary moves to avoid any major issues once the features roll out in the future. All in an effort to make kids safer.

Which is something I believe most people can agree with. The trouble is how Apple is actually doing it. Some people have said it’s motoring towards a Minority Report-like future, where Apple is an all-seeing eye ready to drop the hammer on some person at any given moment. Some cite the fact that these features can be twisted into something much worse, used for negative actions against the users they are trying to protect (and others). And some say Apple is simply doing what it can to distance itself from the problem at hand.

On that latter point, it looks something like this: Apple rolls these features out, and they work flawlessly (which we hope is the case). However, the perpetrators know that to avoid Apple’s all-seeing eye, they just need to turn off iCloud Photos. They know to stop sharing photos through iMessage. And if this happens on a macro level, Apple’s direct connection to people sharing this kind of content is severed. They are no longer using Apple’s iCloud or Apple’s iMessage, but probably third-party options instead. Nothing Apple can do about that, right?

And on the other side of that coin? Well, things keep working out great, and this feature is essentially forgotten about. It’s just another cog in the iOS machine. Which means that some person out there will get caught, or more than one, and then Apple’s software has done the right thing! Apple can say its Expanded Protections for Children really do work, so praise Apple.

Even if you trust Apple, and I consider myself someone who does as much as possible, all things considered, it’s hard not to be a little pessimistic about this. Yes, the drastic reduction in potential false positives with the photo scanning is good. But should Apple be automatically scanning our photo libraries at all? Does this mean Apple is automatically suggesting every person is a potential criminal? Should a third-party security/privacy firm be handling this implementation, rather than Apple itself?

Should Apple be doing this at all?

What do you think?