It should come as no surprise that Apple has had to go out of its way to offer more context about some of the newest features coming to its major platforms. The features center on child protection but rely on some invasive methods to get there, so people are concerned the company might be overstepping. To ease those fears, Apple has tried to shed as much light on the new features as possible.

The goal is to make clear that Apple isn’t giving up on user privacy or security, which remain core tenets of the company’s business model as a whole. In a new interview with TechCrunch, Apple’s head of privacy, Erik Neuenschwander, echoes that sentiment. With these new features, Apple is trying to stop the spread of child sexual abuse material (CSAM), which is generally accepted as a good thing.

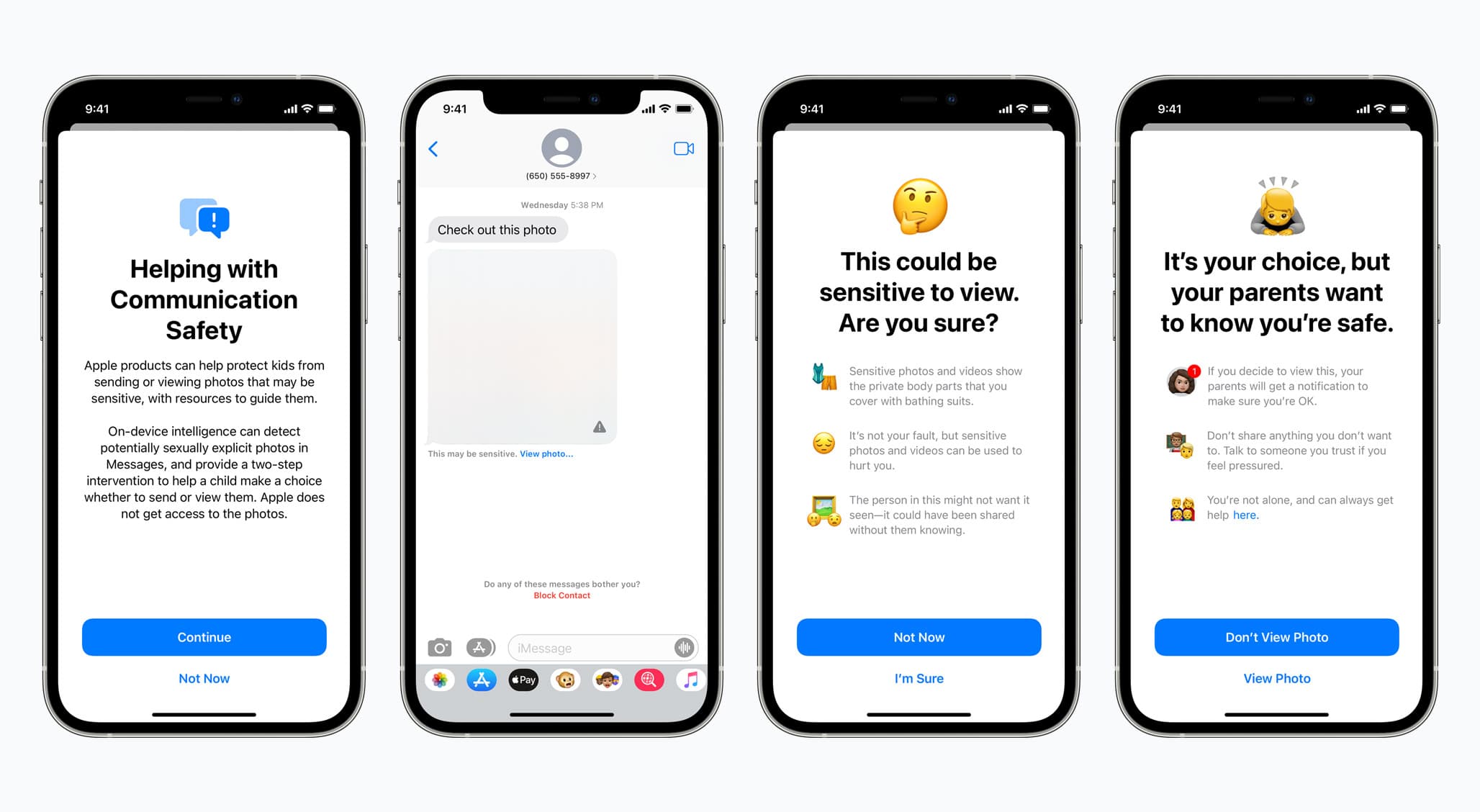

Apple is bundling three new features together as part of this effort. The first is the ability to scan for potentially explicit photos sent or received via iMessage. The second adds more guidance and resources to Search and Siri. The third is the scanning of iCloud Photos libraries. This last feature is limited in scope by design, but it has left many wondering what might happen if the technology fell into the wrong hands or were hijacked by government officials.

In the new interview with TechCrunch, Neuenschwander says that maintaining personal security and user privacy is critical to Apple’s mission moving forward, and that hasn’t changed. In fact, it’s one of the reasons it has taken Apple this long to roll out features like these, especially the scanning of photos against known CSAM hashes.

Neuenschwander was asked why now, and this was his response:

> Why now comes down to the fact that we’ve now got the technology that can balance strong child safety and user privacy. This is an area we’ve been looking at for some time, including current state of the art techniques which mostly involves scanning through entire contents of users libraries on cloud services that — as you point out — isn’t something that we’ve ever done; to look through user’s iCloud Photos. This system doesn’t change that either, it neither looks through data on the device, nor does it look through all photos in iCloud Photos. Instead what it does is gives us a new ability to identify accounts which are starting collections of known CSAM.

Leaving privacy “undisturbed” for individuals and groups not engaged in illegal activity is a focal point for Apple, especially with these new features. Apple’s head of privacy was asked whether the company is rolling out the features this way, with so much emphasis on privacy and data security, to show governments and federal agencies that this kind of detection is possible without sacrificing privacy:

> Now, why to do it is because, as you said, this is something that will provide that detection capability while preserving user privacy. We’re motivated by the need to do more for child safety across the digital ecosystem, and all three of our features, I think, take very positive steps in that direction. At the same time we’re going to leave privacy undisturbed for everyone not engaged in the illegal activity.

Neuenschwander was also asked whether law enforcement could use the existing framework to repurpose the CSAM-scanning feature and search for other content in photo libraries. He said the feature does not undermine Apple’s encryption efforts:

> It doesn’t change that one iota. The device is still encrypted, we still don’t hold the key, and the system is designed to function on on-device data… The alternative of just processing by going through and trying to evaluate users data on a server is actually more amenable to changes [without user knowledge], and less protective of user privacy… It’s those sorts of systems that I think are more troubling when it comes to the privacy properties — or how they could be changed without any user insight or knowledge to do things other than what they were designed to do.

Apple’s head of privacy says the company gains no additional information about people who are not engaged in illegal activity; nothing changes on that front. The new features are designed specifically to detect known CSAM and limit its spread.

The new features will launch later this year, after Apple releases iOS 15, iPadOS 15, watchOS 8, and macOS 12 Monterey to the public.

Read the full interview at TechCrunch for more details, and be sure to check out Apple’s FAQ on the new features.