Back in August, with about as little fanfare as possible, Apple announced a trio of new features coming to iOS 15, iPadOS 15, macOS 12 Monterey, and watchOS 8, each of which falls under the company's new, concerted effort to help protect against child abuse and sexual exploitation. While the features were generally seen as a positive move, one of them drew a lot of pushback once people looked at the specifics. And apparently it worked.

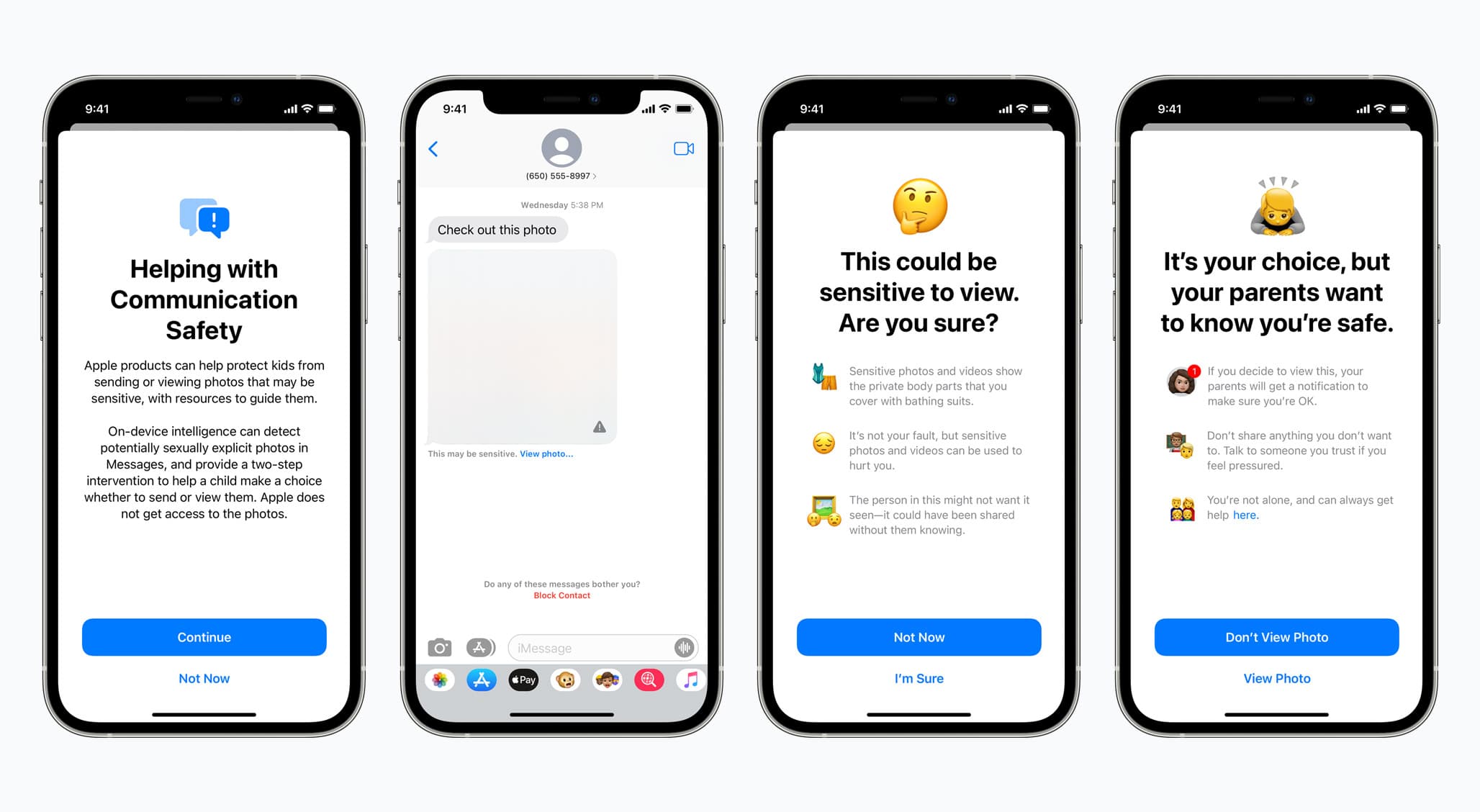

Today, Apple announced that it is delaying the rollout of its child safety features. That includes expanded information about child sexual exploitation, the ability to flag when a young person sends or receives potentially explicit photos, and the child sexual abuse material (CSAM) detection tool, which checks photos bound for a user's iCloud Photos library against hashes of known CSAM. It's this last feature that has drawn most of the ire from outside critics and organizations. Some see it as a slippery slope, despite Apple's efforts to promote the new features, to show that they can't be abused by Apple or anyone else, and generally to sell them as a net good.

In an effort to appease those who have taken umbrage with the new features, Apple has confirmed that it is delaying the rollout to "take additional time" to "make improvements." You can read Apple's full statement on the delay below.

Apple’s statement:

Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.

As the statement notes, reviewing these features and adjusting them as necessary will take time, with Apple giving itself at least the "coming months" to work things out. There's no new launch timetable, of course, and it stands to reason that Apple will be even more transparent about these features going forward. But only time will tell on that front.

As a refresher, Apple says it designed its CSAM detection tool, which, again, matches photos headed to iCloud Photos against known CSAM hashes, with the end user's privacy in mind. Here's how the company put it when it first announced this part of its child safety features:

Apple’s method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices.

Before an image is stored in iCloud Photos, an on-device matching process is performed for that image against the known CSAM hashes. This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. The device creates a cryptographic safety voucher that encodes the match result along with additional encrypted data about the image. This voucher is uploaded to iCloud Photos along with the image.
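For readers curious what "private set intersection" means in practice, here is a toy sketch of one classic textbook approach (Diffie-Hellman-style PSI), written in Python. It is emphatically not Apple's protocol: in this toy version one party learns which items overlap, whereas Apple says its device does not learn the match result and instead wraps it in an encrypted safety voucher. The modulus, the `hash_to_group` helper, the `toy_psi` function, and the sample "photo hashes" are all hypothetical choices for illustration, and the parameters are not secure.

```python
import hashlib
import secrets

# Toy modulus (the Mersenne prime 2^127 - 1). Illustrative only; NOT a secure group.
P = 2**127 - 1


def hash_to_group(item: bytes) -> int:
    """Hypothetical helper: hash an item and map it into the range [2, P - 1]."""
    digest = int.from_bytes(hashlib.sha256(item).digest(), "big")
    return digest % (P - 2) + 2


def blind(values, key):
    """Raise each value to a secret exponent mod P, preserving order."""
    return [pow(v, key, P) for v in values]


def toy_psi(client_items, server_items):
    """Toy DH-style PSI: returns the client items that also appear in the server set.

    Works because exponentiation commutes: (h^a)^b == (h^b)^a (mod P),
    so matching items end up as identical doubly blinded values.
    """
    a = secrets.randbelow(P - 3) + 2  # client's secret exponent
    b = secrets.randbelow(P - 3) + 2  # server's secret exponent

    # Client sends H(x)^a; the server cannot read H(x) from it.
    client_blinded = blind([hash_to_group(x) for x in client_items], a)
    # Server returns H(x)^(ab) in the same order, plus its own set as H(y)^b.
    double_blinded = blind(client_blinded, b)
    server_blinded = blind([hash_to_group(y) for y in server_items], b)
    # Client computes H(y)^(ba) and compares doubly blinded values.
    server_double = set(blind(server_blinded, a))
    return [x for x, v in zip(client_items, double_blinded) if v in server_double]


if __name__ == "__main__":
    device_photos = [b"hash-of-photo-1", b"hash-of-photo-2"]  # hypothetical
    known_list = [b"hash-of-photo-2", b"hash-of-photo-9"]     # hypothetical
    print(toy_psi(device_photos, known_list))
```

The point of the blinding is that neither side ever sends a raw hash: each side only sees values raised to the other's secret exponent, yet matching items still collide after both exponents are applied.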

So, what do you make of Apple’s decision? Think it’s the right move?