Facebook today announced it had begun using artificial intelligence (AI) to identify people on its platform who may be at risk of committing suicide, offering them the option of speaking with a crisis helpline through its Messenger application.

Facebook’s AI algorithm works by spotting warning signs in users’ posts and the comments their friends leave in response. The new tool is being tested only in the US at present.

The new suicide-prevention tools improve upon Facebook’s existing suicide-prevention features, which have been available on the service for more than a decade.

Key enhancements include the use of artificial intelligence and real-time Facebook Live integration. The Facebook Live integration is meant to help identify and support people who might stream their suicide on the platform in real time.

Here’s how it’s supposed to work.

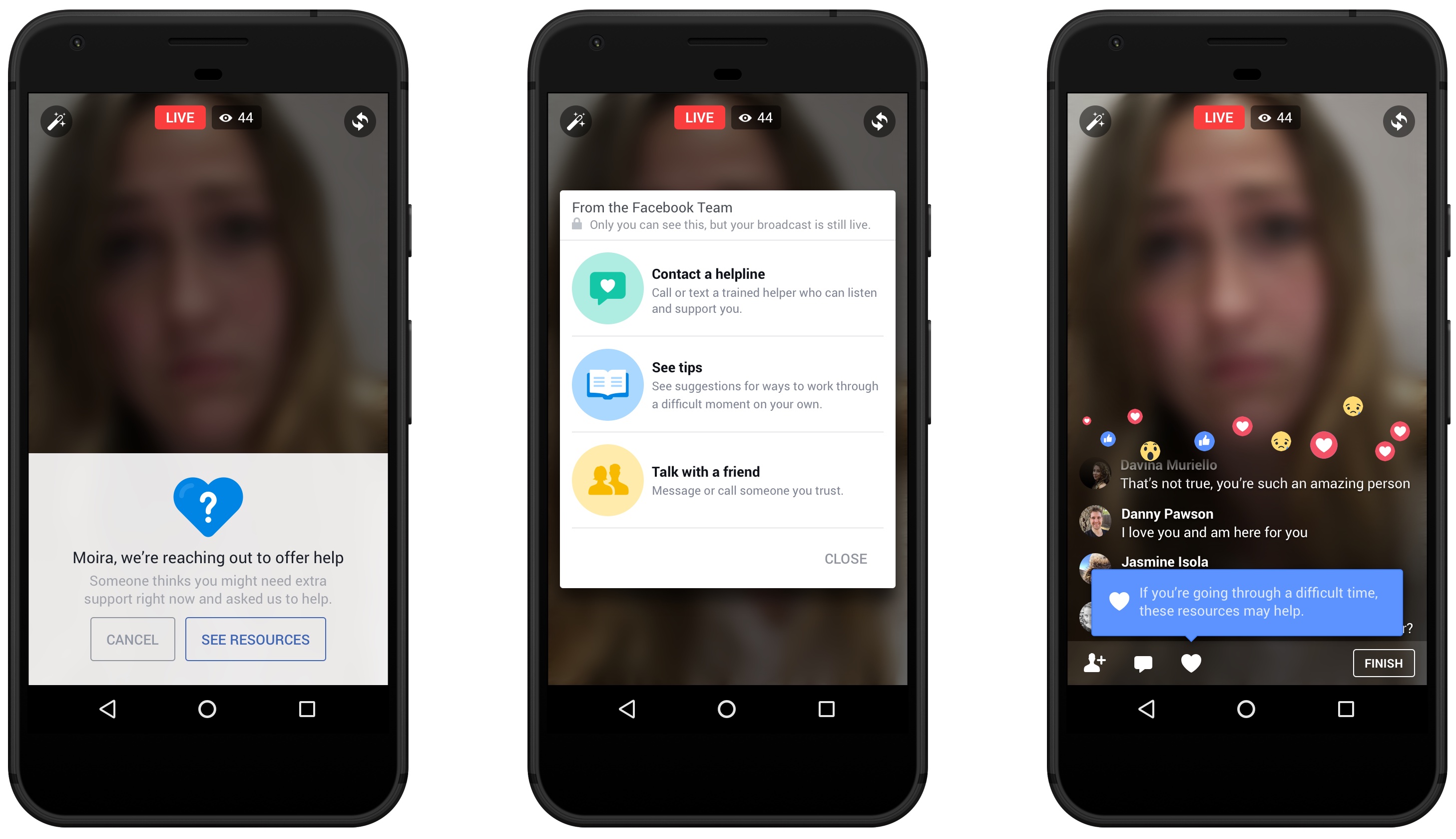

If you are watching a live video of a person who may be at risk of killing themselves and who seems willing to live-stream their suicide on the platform, Facebook now provides tools to help them while they are broadcasting, rather than waiting until the video has been reviewed some time later.

The person sharing a live video will be presented with a set of resources on their screen, allowing them to reach out to a friend, contact a helpline, or see tips.

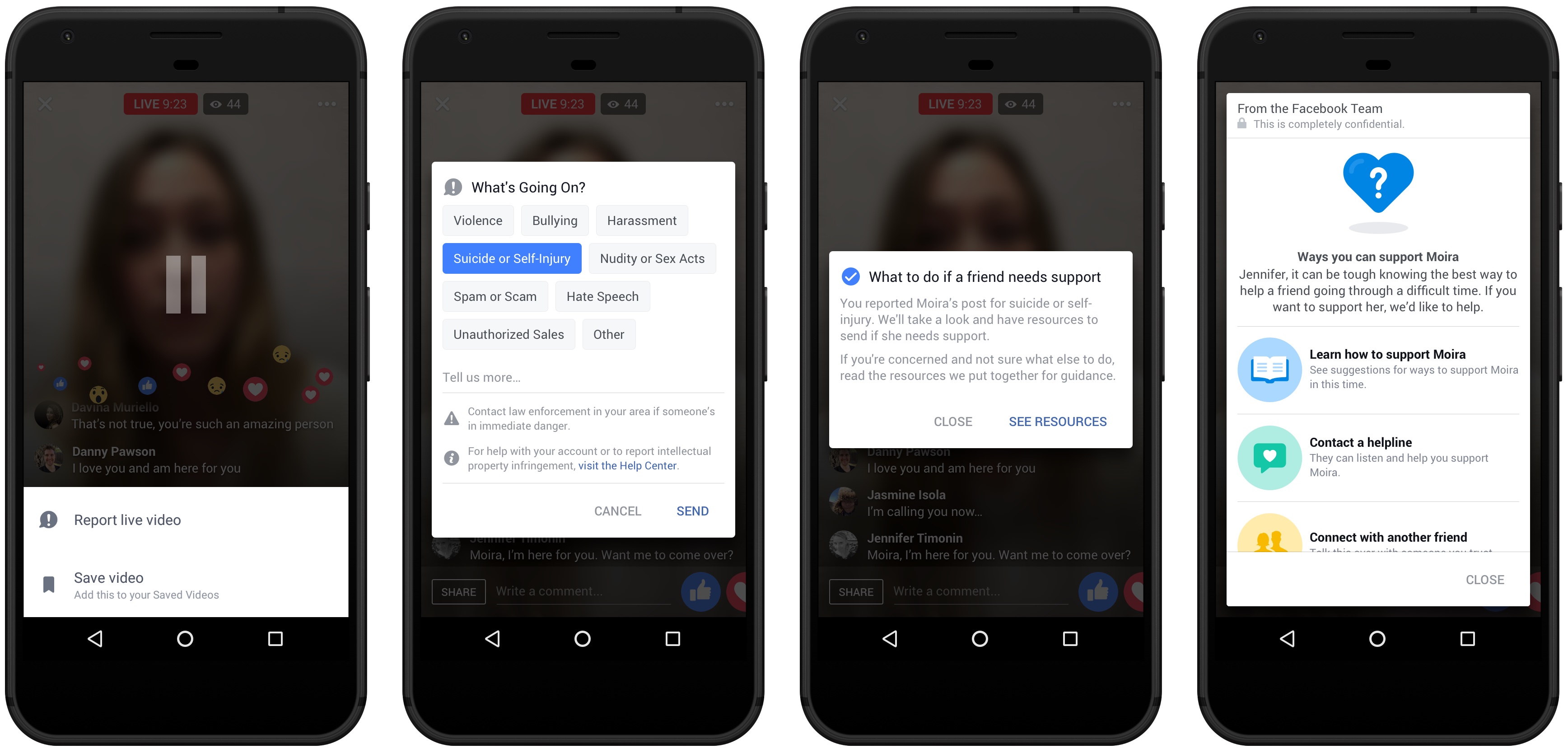

Facebook is also testing new AI-powered pattern recognition based on posts previously reported for suicide. It provides the option to report a post that the system has flagged as being about potential suicide or self-injury.

“We’re also testing pattern recognition to identify posts as very likely to include thoughts of suicide,” said Facebook.

There is one death by suicide in the world every 40 seconds, and suicide is the second leading cause of death for 15- to 29-year-olds. Visit Facebook’s Help Center for information about how to support yourself or a friend.

Source: Facebook