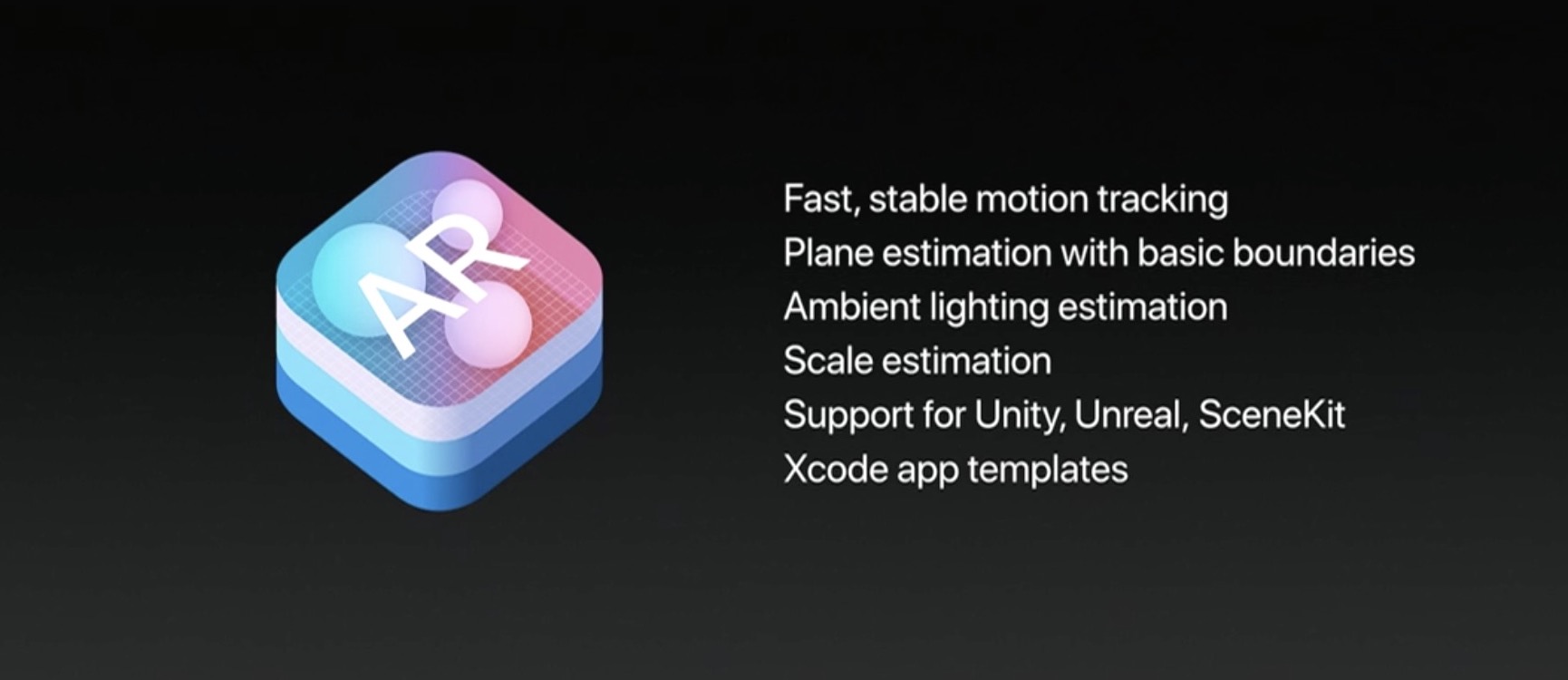

One of the best aspects of ARKit, Apple’s new framework for building augmented reality apps, is that it does all of the incredibly complex heavy lifting, such as detecting room dimensions and horizontal planes and estimating scene lighting, which frees developers to focus on other things.

ARKit analyzes the scene presented by the camera view in real time.

By combining this analysis with motion sensor data, it can detect horizontal planes such as tables and floors, and it can track and place objects on smaller feature points with great precision.

And because it uses the camera sensor, ARKit can estimate the total amount of light available in a scene and apply a matching amount of lighting to virtual objects.

First, check out this demo from Tomás Garcia.

Children’s bedtime stories will never be the same come this fall!

Another developer has put together a quick demo showing off his AI bot, named “Pepper”.

According to the video’s description:

> I’ve been working on an AI bot for a while now. To be short, it’s like V.I.K.I in the movie “I, Robot”. With the help of ARKit, I was able to bring it close to a real life assistant.
>
> Due to obvious reasons, I’m not demonstrating her functionality in this video. So I ended up showing you guys how easy and simple it is to bring 3D models to life with Apple’s new framework.

These videos show how easily ARKit lets developers match the lighting and shadows of their virtual objects to real-world lighting conditions.

Here are some additional ARKit-enabled demos.

ARKit requires an A9 or A10 processor (including the A9X and A10X variants), meaning ARKit apps will require an iPhone SE, an iPhone 6s or newer, the 2017 iPad, or an iPad Pro, such as the 9.7-inch or the 10.5-inch model.

If anything, these videos demonstrate just how easy Apple has made it to put together an AR app. Are you looking forward to ARKit, and why? Leave a comment below to let us know.