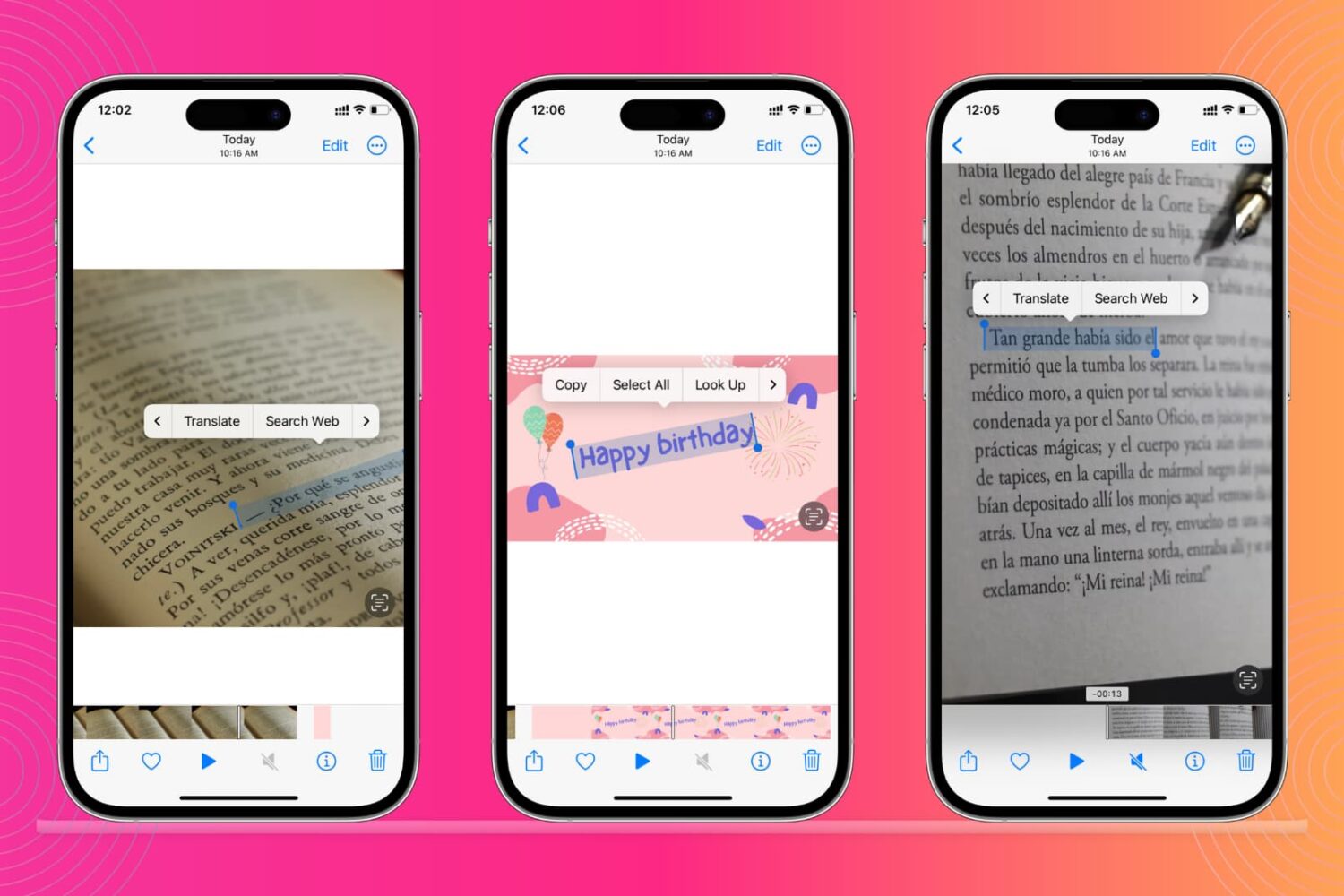

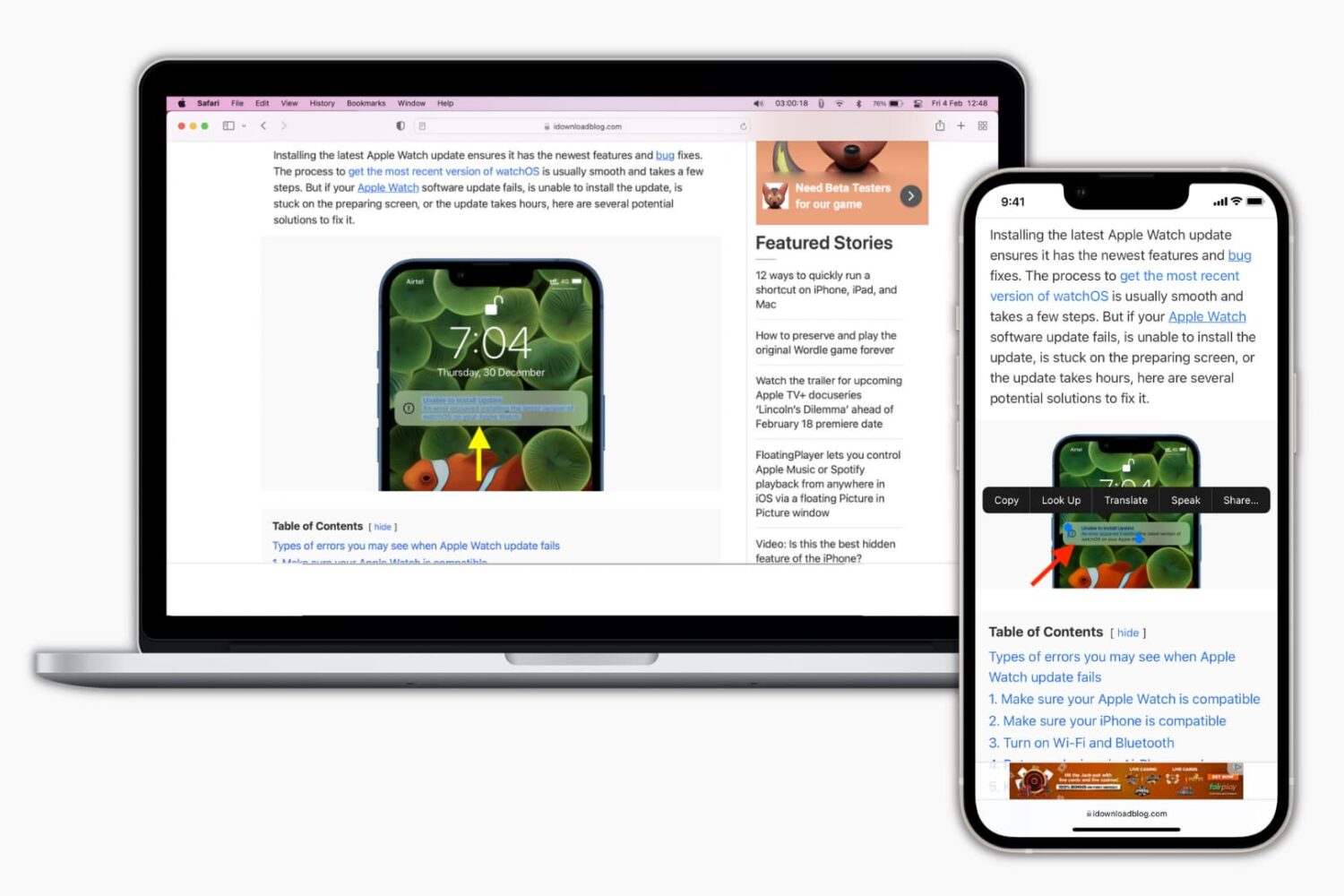

Live Text is one of those cool but somewhat niche features in iOS 15 that lets users interact with text in an image as if it were text in a document. The feature works in images, videos, and even in real time while looking through the camera lens.

This new jailbreak tweak enables Live Text on unsupported iOS 15 devices