Even after an upcoming iOS update adds an opt-in for Siri grading, Apple will continue reviewing computer-generated transcriptions of your Siri audio requests regardless of your opt-in status.

This was announced in a support document published on Apple’s website yesterday, shortly after the company issued a press release in which it apologized for Siri privacy concerns. “We will continue to use computer-generated transcripts to help Siri improve,” wrote the firm.

The only way to stop that will be to disable Siri entirely.

> By default, Apple will no longer retain audio of your Siri requests, starting with a future software release in fall 2019. Computer-generated transcriptions of your audio requests may be used to improve Siri. These transcriptions are associated with a random identifier, not your Apple ID, for up to six months. If you do not want transcriptions of your Siri audio recordings to be retained, you can disable Siri and Dictation in Settings.

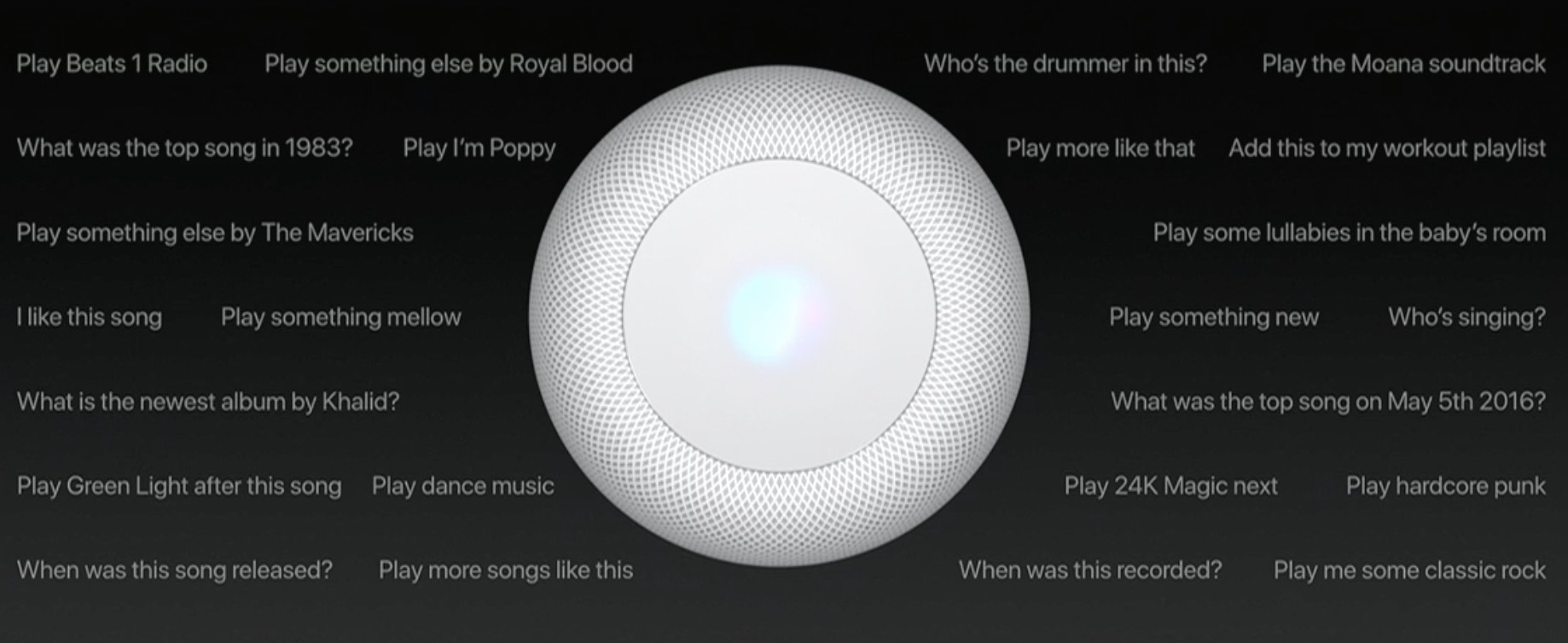

The changes follow a recent report in The Guardian in which a whistleblower explained that Apple’s contractors were tasked with grading Siri requests by listening to snippets of customers’ interactions with the personal assistant. In response, the iPhone maker swiftly shut down the program and conducted a thorough review of its policies.

“We hope that many people will choose to help Siri get better, knowing that Apple respects their data and has strong privacy controls in place,” the company wrote. If you choose to participate, you’ll be able to opt out at any time.

As a result of the privacy scandal, Apple has made the following changes:

- Audio recordings: By default, audio recordings of your Siri interactions will no longer be retained on servers. They will be kept only if you opt in to Siri grading.

- Opt-in: People who would like to help improve Siri will be able to opt in to let Apple use the audio samples of their requests to improve the digital assistant.

- Human grading: Only after you opt in will Apple employees be permitted to listen to audio samples of your Siri interactions.

In other words, unless you opt in to help Apple improve Siri, you’ll enjoy elevated privacy measures because recordings of your conversations with Siri will no longer be retained. It’s worth mentioning that your Siri recordings were never tied to your Apple ID, even during the first six months of retention.

> Siri uses a random identifier (a long string of letters and numbers associated with a single device) to keep track of data while it’s being processed, rather than tying it to your identity through your Apple ID or phone number, a process that we believe is unique among the digital assistants in use today. For further protection, after six months, the device’s data is disassociated from the random identifier.

Apple currently keeps these recordings for six months before removing the random identifier from a copy that it could keep for two years or more. As we said, the company will continue using computer-generated transcripts to improve Siri regardless of your opt-in status.

People who opt in can rest assured that their recordings will no longer be listened to and analyzed by outside contractors. That job will instead be handled by Apple’s own employees, who will now have access to far less data.

> We are making changes to the human grading process to further minimize the amount of data reviewers have access to so that they see only the data necessary to effectively do their work. For example, the names of the devices and rooms you set up in the Home app will only be accessible by the reviewer if the request being graded involves controlling devices in the home.

Apple hopes that “many people” will choose to help Siri get better knowing that Apple “respects their data and has strong privacy controls” in place. The firm says it had reviewed fewer than 0.2 percent of Siri requests and computer-generated transcripts before the grading program was halted. Furthermore, it said that any inadvertent recordings will now be deleted as soon as they’re identified.