Apple reportedly will not rely on the power of the cloud for the initial batch of the rumored new artificial intelligence (AI) features coming with iOS 18.

Many AI-powered features coming to iOS 18 this year “will work entirely on the device,” Bloomberg’s Mark Gurman reported and AppleInsider corroborated.

Asked in the Q&A section of the recent edition of his Power On newsletter for Bloomberg how much iOS 18’s initial new AI features will rely on the cloud, Gurman replied that they will require no server-side processing.

If he’s correct, then the basic AI features in iOS 18, such as text analysis, summarization and response generation, won’t require an internet connection. This likely won’t be true for the more advanced AI capabilities.

The rumored generative AI features in iOS 18

Apple is rumored to be bringing generative AI features to the Siri digital assistant, Apple Music and productivity apps like Pages and Keynote. Large language models would enable a more conversational Siri that can chain multiple commands to carry out complex tasks. This is one of the features that might work offline.

Pages would get a writing assistant, while Keynote might automatically create slide decks from prompts. You can expect other generative AI features elsewhere throughout iOS 18, notably in the Photos and Camera apps, which already rely heavily on ML-powered object, subject and scene recognition.

However, the more advanced features in subsequent iOS 18 updates will probably require the power of the cloud. Apple is rumored to be licensing Google’s Gemini and OpenAI’s AI technology instead of developing its own large language model.

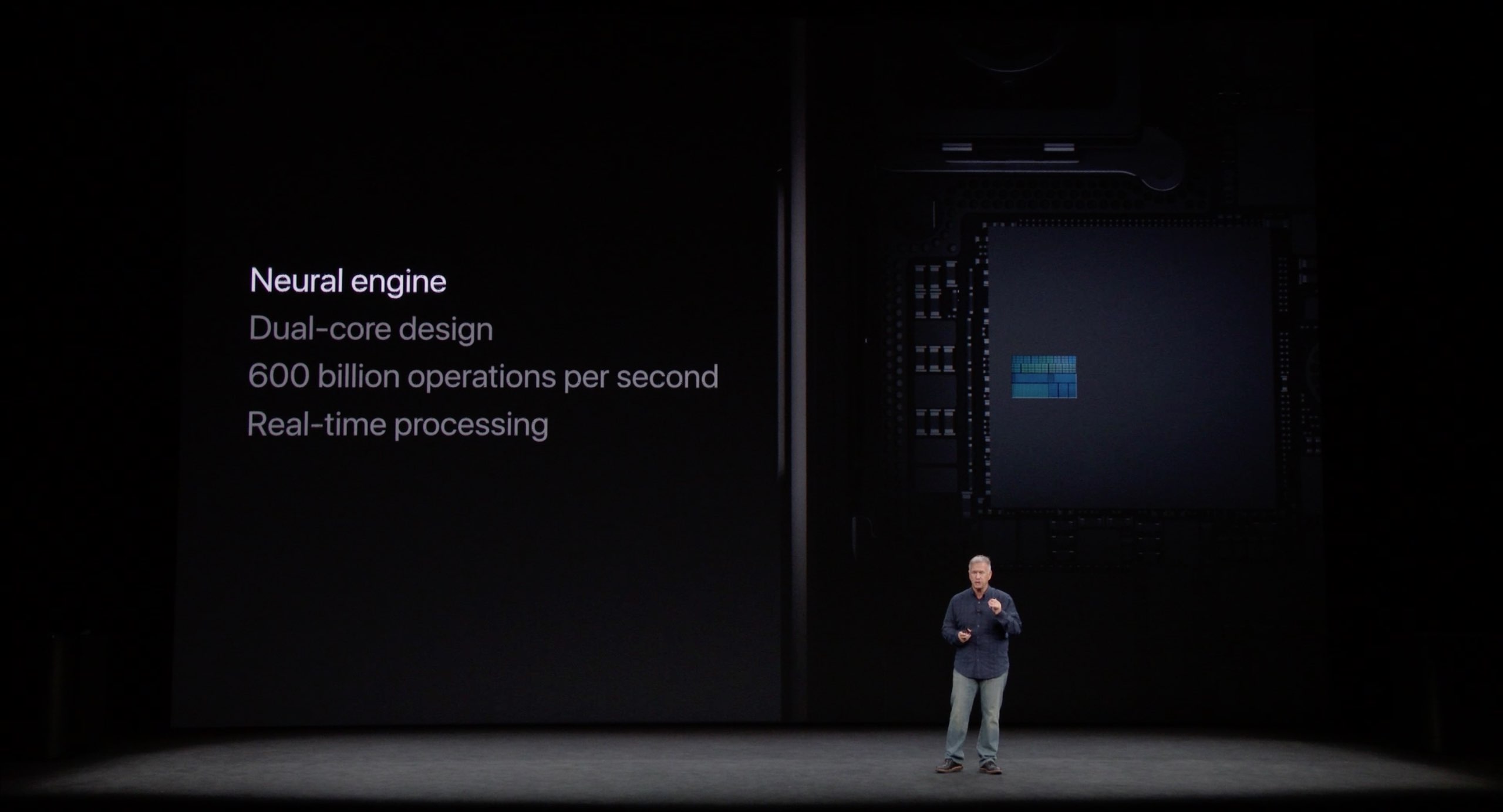

Apple may beef up the Neural Engine for iOS 18’s AI

Apple already processes some Siri requests on-device, but the assistant still requires internet connectivity for the vast majority of commands.

As iOS 18’s AI features are much more demanding, Apple could upgrade the Neural Engine by adding “significantly” more processing cores.

For comparison, the Neural Engine in the M3 chips features sixteen cores. The M1 Ultra and M2 Ultra chips in the Mac Studio and Mac Pro use 32-core Neural Engines which, however, don’t perform as well as the latest Neural Engine in the M3 chips.