Apple under Tim Cook’s leadership has made protecting user privacy one of its missions. The company takes a holistic approach to security and privacy that starts at the semiconductor level. British publication The Independent today published a rare look inside a secretive facility near Apple’s campus, where expensive machines abuse in-house designed chips to see whether they can withstand hacking and any other assault they might face once they make their way into new iPhones.

Andrew Griffin, writing for The Independent:

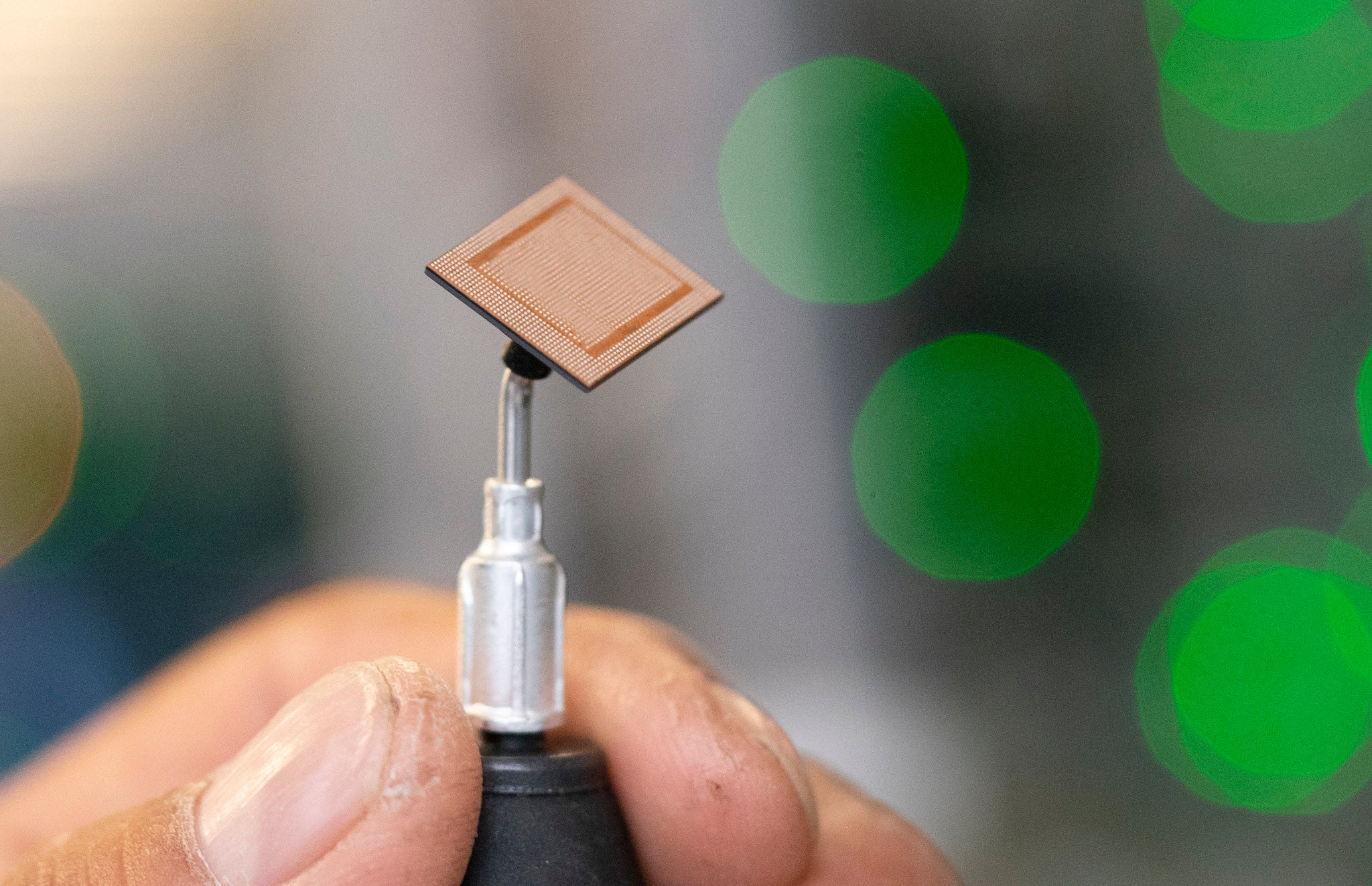

In a huge room somewhere near Apple’s glistening new campus, highly advanced machines are heating, cooling, pushing, shocking and otherwise abusing chips. Those chips – the silicon that will power iPhones and other Apple products of the future – are being put through the most grueling work of their young, secretive lives. Throughout the room are hundreds of circuit boards, into which those chips are wired – those hundreds of boards are placed in hundreds of boxes, where these trying processes take place.

The main focus is testing protections against hacking.

Those chips are here to see whether they can withstand whatever assault anyone might try on them when they make their way out into the world. If they succeed here, then they should succeed anywhere; that’s important, because if they fail out in the world then so would Apple. These chips are the great line of defense in a battle that Apple never stops fighting as it tries to keep users’ data private.

And this…

The chips arrive here years before they make it into this room. The silicon sitting inside the boxes could be years from making it into users’ hands. There are notes indicating what chips they are, but little stickers placed on top of them to stop us reading them.

According to Craig Federighi, Apple’s SVP of Software Engineering, privacy considerations come at the beginning of the process, not the end. “When we talk about building the product, among the first questions that come out is: how are we going to manage this customer data?” he told the publication.

Protecting user data is especially important in China, where the law requires that data be stored locally.

Federighi says because the data is encrypted, even if it was intercepted – even if someone was actually holding the disk drives that store the data itself – it couldn’t be read. Only the two users sending and receiving iMessages can read them, for example, so the fact they’re sent over a Chinese server should be irrelevant if the security works. All they should be able to see is a garbled message that needs a special key to be unlocked.
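To make the idea concrete, here is a toy sketch of end-to-end encryption in Python. It is not iMessage’s actual protocol: it assumes a textbook Diffie-Hellman exchange over a small Mersenne prime and a SHA-256-based stream cipher with an HMAC tag, chosen only because they run on the standard library. All function names are hypothetical. The point is that a server relaying `(nonce, ciphertext, tag)` never holds the key, so the stored bytes are unreadable wherever the server sits.

```python
import hashlib
import hmac
import secrets

# Toy Diffie-Hellman parameters (assumption): 2**127 - 1 is a Mersenne prime,
# far too small and unvetted for real use. Real messengers use vetted curves.
P = 2**127 - 1
G = 3


def make_keypair():
    """Return (private, public). Only the public half is ever sent to a server."""
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def shared_key(my_private, their_public):
    """Both ends derive the same 32-byte key; a relay server cannot."""
    secret = pow(their_public, my_private, P)
    return hashlib.sha256(secret.to_bytes(16, "big")).digest()


def _subkeys(key):
    # Separate keys for encryption and authentication, derived from one secret.
    return (hashlib.sha256(b"enc" + key).digest(),
            hashlib.sha256(b"mac" + key).digest())


def _keystream(enc_key, nonce, length):
    # SHA-256 in counter mode as a stand-in stream cipher (illustration only).
    blocks = [hashlib.sha256(enc_key + nonce + i.to_bytes(8, "big")).digest()
              for i in range((length + 31) // 32)]
    return b"".join(blocks)[:length]


def encrypt(key, plaintext):
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce, ciphertext, tag  # this triple is all a server ever stores


def decrypt(key, nonce, ciphertext, tag):
    enc_key, mac_key = _subkeys(key)
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("wrong key or tampered message")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


if __name__ == "__main__":
    alice_private, alice_public = make_keypair()
    bob_private, bob_public = make_keypair()
    relayed = encrypt(shared_key(alice_private, bob_public), b"See you at 6")
    print(decrypt(shared_key(bob_private, alice_public), *relayed))
```

Anyone holding only the relayed triple, such as whoever controls the disks, gets a `ValueError` instead of plaintext.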

Apple’s approach to privacy leans heavily on differential privacy techniques, which anonymize and minimize user data, as well as on on-device processing, which is why the company has been spending big bucks on custom silicon development. That is also why the last two generations of Apple chips include hardware-accelerated machine learning via the Neural Engine.
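For readers unfamiliar with differential privacy, the sketch below shows randomized response, a textbook building block of local differential privacy. It is not Apple’s published algorithm; it only illustrates the principle: each device adds noise before anything leaves it, so no single report can be trusted, yet the aggregate rate is still recoverable. The function names and the 30% example population are hypothetical.

```python
import math
import random


def randomized_response(true_answer, epsilon, rng):
    """Report the true bit with probability e^eps / (1 + e^eps), otherwise flip it.

    Every individual report is plausibly deniable (epsilon-local DP).
    """
    p_truth = math.exp(epsilon) / (1 + math.exp(epsilon))
    return true_answer if rng.random() < p_truth else not true_answer


def estimate_true_rate(reports, epsilon):
    """Undo the known noise: E[observed] = p * rate + (1 - p) * (1 - rate)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    p = math.exp(epsilon) / (1 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    return (observed - (1 - p)) / (2 * p - 1)


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical population: 30% of 100,000 devices have some property.
    truth = [i < 30_000 for i in range(100_000)]
    reports = [randomized_response(t, epsilon=1.0, rng=rng) for t in truth]
    print(round(estimate_true_rate(reports, epsilon=1.0), 3))  # a value near 0.3
```

Smaller `epsilon` means more noise per report and stronger deniability, at the cost of needing more devices for an accurate aggregate.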

Federighi explains:

Last fall, we talked about a big special block in our chips that we put in our iPhones and our latest iPads called the Apple Neural Engine – it’s unbelievably powerful at doing AI inference. And so we can take tasks that previously you would have had to do on big servers, and we can do them on device. And often when it comes to inference around personal information, your device is a perfect place to do that: you have a lot of that local context that should never go off your device, into some other company.

Is this something other companies might adopt?

I think ultimately the trend will be to move more and more to the device because you want intelligence both to be respecting your privacy, but you also want it to be available all the time, whether you have a good network connection or not, you want it to be very high performance and low latency.

Apple even created health and fitness labs to ensure your health data is safe:

To respond to that, Apple created its fitness lab. It is a place devoted to collecting data – but also a monument to the various ways that Apple works to keep that data safe.

Data streams in through the masks that are wrapped around the faces of the people taking part in the study, data is collected by the employees who are tapping their findings into the iPads that serve as high-tech clipboards, and it is streaming in through the Apple Watches connected to their wrists.

In one room, there is an endless swimming pool that allows people to swim in place as a mask across their face analyzes how they are doing so. Next door, people are doing yoga wearing the same masks. Another section includes huge rooms that are somewhere between a jail cell and a fridge, where people are cooled down or heated up to see how that changes the data that is collected.

All of that data will be used to collect and understand even more data, on normal people’s arms. The function of the room is to tune up the algorithms that make the Apple Watch work and by doing so make the information it collects more useful: Apple might learn that there is a more efficient way to work out how many calories people are burning when they run, for instance, and that might lead to software and hardware improvements that will make their way onto your wrist in the future.

Privacy protections extend even to Apple’s own employees:

Even as those vast piles of data are being collected, it’s being anonymized and minimized. Apple employees who volunteer to come along to participate in the studies scan themselves into the building – and then are immediately disassociated from that ID card, being given only an anonymous identifier that can’t be associated with that staff member.

Apple, by design, doesn’t even know which of its own employees it is harvesting data about. The employees don’t know why their data is being harvested, only that this work will one day end up in unknown future products.
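The check-in step described above can be sketched as follows. This is a hypothetical model, not Apple’s system: the class and method names are invented. It assumes the badge is used only to authorize entry, and that study data is filed under a freshly generated random identifier with no stored link back to the badge.

```python
import secrets


class StudyCheckIn:
    """Hypothetical check-in desk: badges open the door but never label data."""

    def __init__(self, authorized_badges):
        self._authorized = set(authorized_badges)  # access list only
        self.measurements = {}  # anonymous ID -> readings; no badge IDs in here

    def check_in(self, badge_id):
        if badge_id not in self._authorized:
            raise PermissionError("badge not authorized")
        # Random rather than derived from the badge, so nobody can
        # recompute it from a staff roster.
        anonymous_id = secrets.token_hex(16)
        self.measurements[anonymous_id] = []
        return anonymous_id  # badge_id is not stored anywhere after this returns

    def record(self, anonymous_id, reading):
        self.measurements[anonymous_id].append(reading)
```

Using a random token instead of, say, a hash of the badge number is the key design choice: a hash could be reversed simply by hashing every employee’s badge and comparing.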

At the heart of all these privacy efforts is the Secure Enclave, a cryptographic coprocessor embedded in the main system-on-a-chip that protects your fingerprint, face and payment data and your cryptographic keys, and manages the keys used for on-the-fly file encryption and decryption.

Every Secure Enclave Apple has built so far runs its own kernel and firmware on top of a hardware root of trust. Getting technical, the coprocessor runs the Secure Enclave OS (sepOS), which is based on an Apple-customized version of the L4 microkernel. This software is signed by Apple, verified by the Secure Enclave Boot ROM and updated through a personalized software update process.
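The “signed by Apple, verified by the Boot ROM” chain can be illustrated with a deliberately insecure sketch. It assumes textbook RSA with a toy key built from two Mersenne primes and no padding, which is not Apple’s actual signature scheme. The names `vendor_sign`, `boot_rom_verify` and `device_id` are hypothetical. What it shows is the asymmetry: the ROM holds only the public key, so it can check firmware but never produce a valid signature. Mixing a device identifier into the signed digest models the idea of a personalized update.

```python
import hashlib

# Toy textbook-RSA key (assumption): two Mersenne primes, no padding.
# Insecure by design; it only shows who holds which half of the key.
_P, _Q = 2**61 - 1, 2**89 - 1
PUBLIC_N, PUBLIC_E = _P * _Q, 65537                  # burned into the hypothetical ROM
_PRIVATE_D = pow(PUBLIC_E, -1, (_P - 1) * (_Q - 1))  # stays with the signer


def _digest(firmware, device_id):
    # Binding a per-device ID into the digest models a "personalized" update:
    # a signature issued for one device does not verify on another.
    h = hashlib.sha256(device_id + b"|" + firmware).digest()
    return int.from_bytes(h, "big") % PUBLIC_N


def vendor_sign(firmware, device_id):
    """Runs on the vendor's signing server, the only place the private key exists."""
    return pow(_digest(firmware, device_id), _PRIVATE_D, PUBLIC_N)


def boot_rom_verify(firmware, device_id, signature):
    """Boot only if the signature checks out against the public key alone."""
    return pow(signature, PUBLIC_E, PUBLIC_N) == _digest(firmware, device_id)
```

A tampered image, or a signature replayed onto a different device, fails the check and would not be booted.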

The Secure Enclave also processes fingerprint and face scans from the Touch ID and Face ID sensors, determines whether there’s a match, and then enables access or purchases on the user’s behalf. In the Apple A12 Bionic and Apple S4 chips that power the company’s latest phones, tablets and watches, the Secure Enclave is paired with a secure storage integrated circuit (IC) that stores anti-replay counters.

Here is how Apple’s iOS Security Guide describes it:

The secure storage IC is designed with immutable ROM code, a hardware random number generator, cryptography engines and physical tamper detection. To read and update counters, the Secure Enclave and storage integrated circuitry employ a secure protocol that ensures exclusive access to the counters.

Anti-replay services on the Secure Enclave are used for revocation of data over events that mark anti-replay boundaries including, but not limited to passcode change, Touch ID/Face ID enable/disable, Touch ID fingerprint add/delete, Face ID reset, Apple Pay card add/remove and Erase All Content and Settings in iOS.
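The anti-replay idea can be modeled in a few lines. This is a hypothetical sketch, not Apple’s protocol: it assumes data is authenticated together with the current value of a counter that only ever increases, and that an event such as a passcode change bumps the counter. An attacker who saved an old copy of the data and restores it later is then rejected. The class names are invented, and a real design would also encrypt the payload.

```python
import hashlib
import hmac
import secrets


class CounterChip:
    """Hypothetical secure storage IC: a counter that can only go up."""

    def __init__(self):
        self._value = 0

    def read(self):
        return self._value

    def increment(self):
        self._value += 1
        return self._value


class AntiReplayEnclave:
    def __init__(self, chip):
        self._chip = chip
        self._root_key = secrets.token_bytes(32)  # never leaves the enclave

    def _tag(self, counter, payload):
        msg = counter.to_bytes(8, "big") + payload
        return hmac.new(self._root_key, msg, hashlib.sha256).digest()

    def seal(self, payload):
        """Bind payload to the current counter value (authentication only)."""
        counter = self._chip.read()
        return {"counter": counter, "payload": payload,
                "tag": self._tag(counter, payload)}

    def unseal(self, blob):
        if not hmac.compare_digest(self._tag(blob["counter"], blob["payload"]),
                                   blob["tag"]):
            raise ValueError("blob was modified")
        if blob["counter"] != self._chip.read():
            raise ValueError("stale blob: revoked by an anti-replay event")
        return blob["payload"]

    def anti_replay_event(self):
        """E.g. passcode change or Face ID reset: every older blob stops working."""
        self._chip.increment()
```

Because the counter lives in separate hardware that only counts upward, restoring an old copy of storage cannot roll it back. Editing the counter field in an old blob breaks its tag.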

The Secure Enclave is physically walled off from the rest of the system: communication between it and the main processor is limited to an interrupt-driven mailbox and shared memory data buffers. All iOS sees is the result of a Secure Enclave operation, such as whether a fingerprint matched.
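The mailbox arrangement can be modeled in software, with the caveat that Python cannot enforce hardware isolation, so this only illustrates the interface. It is a hypothetical sketch: a worker thread stands in for the coprocessor, a request queue for the mailbox, and a simple distance threshold for the real biometric matcher. The names and the `threshold` value are assumptions. Enrolled templates stay inside the object; the caller only ever receives `True` or `False`.

```python
import math
import queue
import threading


class MailboxEnclave(threading.Thread):
    """Hypothetical model: requests go into a mailbox, only booleans come back."""

    def __init__(self, threshold=0.5):
        super().__init__(daemon=True)
        self.inbox = queue.Queue()   # the only way in
        self.outbox = queue.Queue()  # the only way out: True/False results
        self._templates = []         # enrolled templates are never put in outbox
        self._threshold = threshold  # assumed match distance, illustrative only

    def run(self):
        while True:
            op, features = self.inbox.get()  # sleeps until a request arrives
            if op == "enroll":
                self._templates.append(tuple(features))
                self.outbox.put(True)
            elif op == "match":
                hit = any(math.dist(features, t) < self._threshold
                          for t in self._templates)
                self.outbox.put(hit)
            else:
                self.outbox.put(False)  # unknown requests get a bare refusal


def ask(enclave, op, features):
    """What the main processor does: post a request, wait for a yes/no."""
    enclave.inbox.put((op, tuple(features)))
    return enclave.outbox.get(timeout=1.0)
```

This single-caller model pairs each request with the next reply; a real mailbox would tag replies so that concurrent requests cannot be mixed up.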

Fingerprint and face data processed by the Secure Enclave never leaves the device and is never sent to the cloud.

Top image: An engineer works in one of Apple’s labs testing current and future generation chips in Cupertino, California. Credit: Brooks Kraft/Apple.