Now that Apple is pushing several different iPhone models to a wider range of countries, it might be wise if Siri understood languages spoken outside of North America and Europe. To help fine-tune the regional differences in language, Apple has filed for a patent on integrating geolocation with speech recognition.

The filing, published by the U.S. Patent and Trademark Office last Thursday, seeks to use location clues to enhance Siri, as well as the speech-to-text services available with the newly introduced iPhone 5s and iPhone 5c…

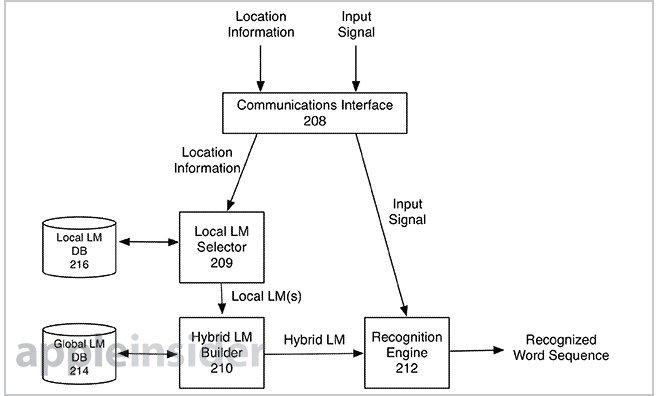

With the sleep-inducing title ‘Automatic input signal recognition using location based language modeling,’ the speech-recognition patent (via AppleInsider) hopes to create a system where a speaker’s location can improve the likelihood of a command or question being understood.

Currently, Apple’s speech recognition can parse local language, but does not do so well when the difference is small, such as between two geographic regions. Under the patent, the speaker’s specific location would instead be used to better define the speech.

As an example, Apple provides the words “goat hill”.

At the moment, the speech recognition would fail to understand, instead thinking the person meant “good will.” However, adding geolocation would determine there is a nearby Goat Hill store and give that meaning more weight.
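To make the idea concrete, here is a rough Python sketch of location-based reweighting. It is not taken from the filing: the function names, the `boost` factor, and the probabilities are all made up for illustration, and Apple's actual method may work quite differently. The recognizer's candidate phrases each start with a base score, and any candidate that matches the name of a nearby place gets extra weight.

```python
def reweight(candidates, nearby_places, boost=2.0):
    """Return renormalized scores after boosting candidates that match a nearby place.

    candidates:    dict mapping a candidate phrase to its base probability
    nearby_places: names of places close to the speaker (hypothetical input)
    boost:         illustrative multiplier, not a value from the patent
    """
    nearby = {name.lower() for name in nearby_places}
    scored = {}
    for phrase, prob in candidates.items():
        # Candidates that match a nearby place name get more weight.
        weight = boost if phrase.lower() in nearby else 1.0
        scored[phrase] = prob * weight
    total = sum(scored.values())
    if total == 0:
        return scored
    # Renormalize so the scores sum to 1 again.
    return {phrase: score / total for phrase, score in scored.items()}


def best_guess(candidates, nearby_places, boost=2.0):
    """Pick the highest-scoring phrase after location reweighting."""
    scores = reweight(candidates, nearby_places, boost)
    return max(scores, key=scores.get)


# Invented base scores: acoustically, "good will" looks slightly more likely.
heard = {"good will": 0.6, "goat hill": 0.4}

print(best_guess(heard, nearby_places=[]))             # no location hint
print(best_guess(heard, nearby_places=["Goat Hill"]))  # speaker is near Goat Hill
```

With these invented numbers, the first call picks "good will" and the second picks "goat hill", since the nearby store is enough to tip the balance.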

The patent was first filed in 2012.